TROS Gesture Detection

Application Scenarios

RDK Studio helps beginners get started quickly with an efficient gesture recognition workflow. Gesture recognition algorithms combine techniques such as hand keypoint detection and gesture analysis, allowing a computer to interpret human gestures as corresponding commands. This enables functions such as gesture control and sign language translation, with applications mainly in smart homes, smart cockpits, smart wearable devices, and similar fields.

Preparation

Both USB and MIPI cameras are supported. This section uses a USB camera as an example. The USB camera connection method is as follows:

Running Process

- Click Node-RED under the TROS Gesture Detection example.

- Enter the example application flow interface.

Tip: Click the icon in the top right corner of RDK Studio to quickly open the example in a browser!

- Select the type of connected camera and click the corresponding start command, for example Start(USB Cam). After about 10 seconds, the visualization window opens automatically and recognition begins; the recognized results are also announced by voice.

- Performance Result: Click the debug icon on the right to switch the right sidebar to the debug window, where you can view the performance information output.

- Output Statistics: Outputs count statistics for the collected gestures.

- Click the Stop command to turn off the camera.

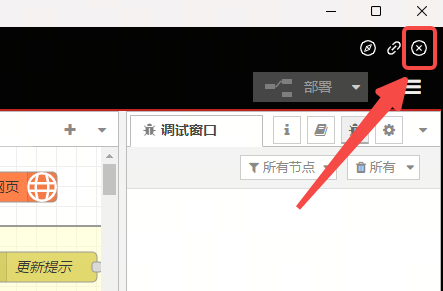

Note: If you modify nodes, flows, etc., you need to click the button in the top right corner for the changes to take effect!

- Click the × icon in the top right corner and select "Close APP" to exit the Node-RED application.
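The Output Statistics step in the list above reports how many times each gesture was collected. As a rough illustration of that kind of tally (not the example's actual implementation), the following minimal Python sketch counts gesture labels across frames. The function name `count_gestures`, the per-frame list format, and the gesture labels are all hypothetical and chosen only for illustration.

```python
from collections import Counter


def count_gestures(frames):
    """Tally how often each gesture label appears across all frames.

    `frames` is assumed to be a list of per-frame label lists; a frame
    with no detected gesture is an empty list. This input format is a
    hypothetical stand-in, not the format used by the example flow.
    """
    counts = Counter()
    for labels in frames:
        counts.update(labels)  # add one count per label seen in this frame
    return dict(counts)


# Hypothetical gesture labels, used only to exercise the function.
sample_frames = [["Okay"], ["Okay", "Palm"], [], ["ThumbUp"]]
print(count_gestures(sample_frames))
# prints {'Okay': 2, 'Palm': 1, 'ThumbUp': 1}
```

Counting per frame like this means a gesture held for several frames is counted several times; a real pipeline might instead count only when the detected gesture changes.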

More Features

Visualization Page

- Click the Visualization Interface command, which automatically opens TogetherROS Web Display.

- Click Web Display to enter the visualization page for real-time gesture recognition.

- Click the × in the top right corner of the visualization page to exit it.

Learn More

Click the Learn More command to open the online documentation for more information about the examples.