5.4.10 Vision & Speech Box

Overview

This section shows how to run the full ASR + VLM/LLM + TTS pipeline on the RDK platform.

Code repository: https://github.com/D-Robotics/hobot_llamacpp.git

Supported Platforms

| Platform | OS/Environment | Example Feature |
|---|---|---|
| RDK X5 (4GB RAM) | Ubuntu 22.04 (Humble) | Vision & Speech Box |

Note: Only supported on RDK X5 with 4GB RAM.

Preparation

RDK Platform

- RDK must be the 4GB RAM version.

- RDK should be flashed with Ubuntu 22.04 system image.

- TogetheROS.Bot should be successfully installed on RDK.

- Install the ASR module for speech input:

apt install tros-humble-sensevoice-ros2
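The preparation checklist above can be checked from a terminal before launching anything. The following is a minimal sketch, not part of hobot_llamacpp: the helper names mem_total_kb and os_version_id are hypothetical, and the script only reports what it finds rather than enforcing a threshold, since the memory the kernel reports on a 4GB board is somewhat below 4GB.

```shell
#!/bin/sh
# Hypothetical preflight helpers (not shipped with tros.b).

# Read MemTotal (in kB) from a meminfo-style file; defaults to /proc/meminfo.
mem_total_kb() {
    awk '/^MemTotal:/ {print $2}' "${1:-/proc/meminfo}"
}

# Read VERSION_ID from an os-release-style file, stripping optional quotes.
os_version_id() {
    sed -n 's/^VERSION_ID="\{0,1\}\([^"]*\)"\{0,1\}$/\1/p' "${1:-/etc/os-release}"
}

echo "MemTotal:   $(mem_total_kb) kB"
echo "OS version: $(os_version_id) (expected 22.04)"

# tros.b is considered present if its setup script exists.
if [ -f /opt/tros/humble/setup.bash ]; then
    echo "tros.b:     found /opt/tros/humble/setup.bash"
else
    echo "tros.b:     /opt/tros/humble/setup.bash not found"
fi

# The ASR package is considered installed if dpkg knows about it.
if dpkg -s tros-humble-sensevoice-ros2 >/dev/null 2>&1; then
    echo "ASR module: tros-humble-sensevoice-ros2 is installed"
else
    echo "ASR module: tros-humble-sensevoice-ros2 is not installed"
fi
```

Both helpers accept an optional file argument, which makes them easy to try against saved copies of /proc/meminfo or /etc/os-release from another board.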

Usage

On RDK Platform

-

You can use the Vision Language Model Vision Language Model

-

You can use the TTS tool Text-to-Speech

-

ASR tool should be installed

-

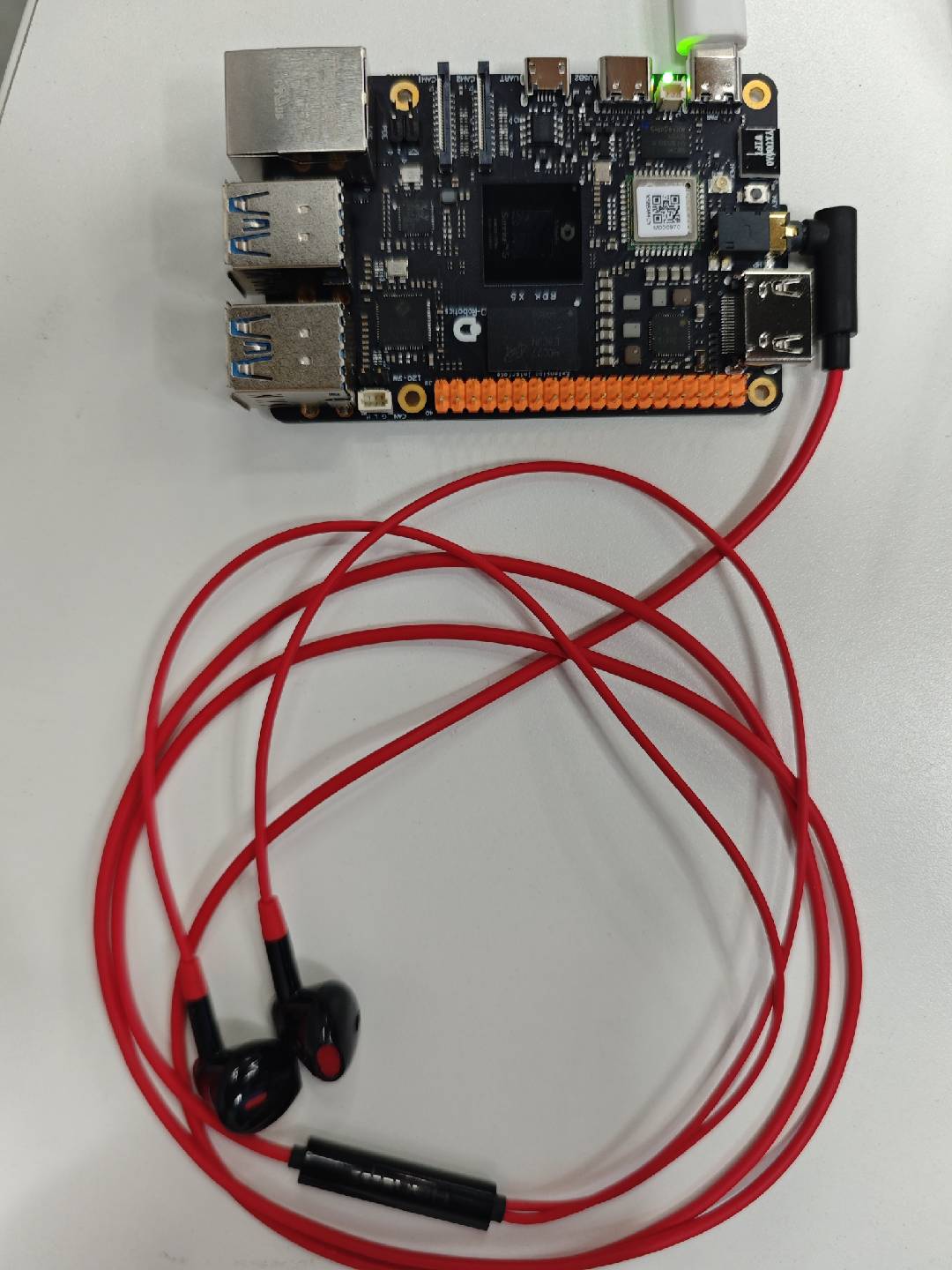

The RDK device has a 3.5mm headphone jack, and a wired headset should be plugged in. After plugging in, check if the audio device is detected:

root@ubuntu:~# ls /dev/snd/

by-path controlC1 pcmC1D0c pcmC1D0p timer

The entries pcmC1D0c (capture) and pcmC1D0p (playback) belong to card 1, device 0, so the audio device name is "hw:1,0".
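If the card or device number on your board differs from the listing above, the name can be derived from the node names in /dev/snd/. The sketch below relies on the standard ALSA naming pattern pcmC<card>D<device> followed by c (capture) or p (playback); the helper name pcm_to_hw is hypothetical.

```shell
#!/bin/sh
# Map an ALSA PCM node name (e.g. pcmC1D0c) to an "hw:<card>,<device>" string.
# Prints nothing if the name does not follow the pcmC<card>D<device>[c|p] pattern.
pcm_to_hw() {
    printf '%s\n' "${1##*/}" |
        sed -n 's/^pcmC\([0-9][0-9]*\)D\([0-9][0-9]*\)[cp]$/hw:\1,\2/p'
}

# List the capture-capable devices currently present on this board.
for node in /dev/snd/pcmC*D*c; do
    [ -e "$node" ] || continue   # the glob matched nothing
    echo "$node -> $(pcm_to_hw "$node")"
done
```

The printed value is what would be passed as the audio_device launch argument.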

Instructions

# Configure tros.b environment

source /opt/tros/humble/setup.bash

Using MIPI Camera for Image Publishing

cp -r /opt/tros/${TROS_DISTRO}/lib/hobot_llamacpp/config/ .

# Set up MIPI camera

export CAM_TYPE=mipi

ros2 launch hobot_llamacpp llama_vlm.launch.py audio_device:=hw:1,0

Using USB Camera for Image Publishing

cp -r /opt/tros/${TROS_DISTRO}/lib/hobot_llamacpp/config/ .

# Set up USB camera

export CAM_TYPE=usb

ros2 launch hobot_llamacpp llama_vlm.launch.py audio_device:=hw:1,0

Using Local Images for Playback

cp -r /opt/tros/${TROS_DISTRO}/lib/hobot_llamacpp/config/ .

# Set up local image playback

export CAM_TYPE=fb

ros2 launch hobot_llamacpp llama_vlm.launch.py audio_device:=hw:1,0
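The three variants above differ only in the value of CAM_TYPE. As a convenience, they can be folded into one small helper. This is a sketch under assumptions: vlm_launch_cmd is a hypothetical name, not something provided by hobot_llamacpp, and it only prints the command so you can inspect it before running it.

```shell
#!/bin/sh
# Hypothetical helper (not part of hobot_llamacpp): print the launch command
# for one of the three CAM_TYPE values used in this section.
vlm_launch_cmd() {
    cam_type=$1
    audio_device=${2:-hw:1,0}   # default matches the device name used in this section
    case "$cam_type" in
        mipi|usb|fb) ;;
        *)
            echo "unsupported CAM_TYPE: $cam_type (expected mipi, usb or fb)" >&2
            return 1
            ;;
    esac
    echo "CAM_TYPE=$cam_type ros2 launch hobot_llamacpp llama_vlm.launch.py audio_device:=$audio_device"
}

# Dry run: show the command for a USB camera without executing it.
vlm_launch_cmd usb
```

To actually start the example, run the printed line (or eval "$(vlm_launch_cmd usb)") in a shell where the tros.b environment has been sourced and the config directory has been copied as shown above.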

After the program starts, you can interact with the device by voice. Wake the device by saying "Hello", then give it a task, such as "Please describe this image". The device acknowledges the task by replying "Okay", runs inference, and then starts outputting text.

Example flow:

- User: "Hello, describe this image."

- Device: "Okay, let me take a look."

- Device: "This image shows xxx."

Advanced Features

In addition to vision-language models, this package supports conversation with text-only language models:

# Configure tros.b environment

source /opt/tros/humble/setup.bash

cp -r /opt/tros/${TROS_DISTRO}/lib/hobot_llamacpp/config/ .

ros2 launch hobot_llamacpp llama_llm.launch.py llamacpp_gguf_model_file_name:=Qwen2.5-0.5B-Instruct-Q4_0.gguf audio_device:=hw:1,0

After the program starts, you can interact with the device by voice. Once initialization completes, the device says "I'm here". Wake it by saying "Hello", then give it a task, such as "How should I relax on weekends?". The device then runs inference and outputs the response.

- Device: "I'm here"

- User: "Hello, how should I relax on weekends?"

- Device: "Rest is important. You can read, listen to music, paint, or exercise."

Notes

- About the ASR module: After ASR starts, it writes logs to the serial port even when the wake word is not detected, so you can speak to check whether detection works. If nothing is detected, check the device status and number with ls /dev/snd/.

- About the wake word: The "Hello" wake word is sometimes not recognized, in which case there is no output. If this happens, check the logs for "[llama_cpp_node]: Recved string data: xxx" to see whether any text was recognized.

- About audio devices: Use the same device for recording and playback to avoid echo. To use a different device, search for audio_device in /opt/tros/${TROS_DISTRO}/share/hobot_llamacpp/launch/llama_vlm.launch.py and modify the device name.

- About model selection: Currently, the VLM only supports the model provided in this example. The LLM supports inference with GGUF-converted models from https://huggingface.co/models?search=GGUF.
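The log and launch-file checks from the notes above can be scripted. The following is a minimal sketch: extract_recognized_text is a hypothetical helper, and the "[INFO] [...]" prefix on the sample line is an assumed log format. Only the "[llama_cpp_node]: Recved string data: " marker is taken from this section.

```shell
#!/bin/sh
# Keep only the recognized text from lines containing the
# "[llama_cpp_node]: Recved string data: " marker; other lines are dropped.
extract_recognized_text() {
    sed -n 's/.*\[llama_cpp_node\]: Recved string data: //p'
}

# Demo on a sample line. The "[INFO] [...]" prefix is an assumed log format.
printf '%s\n' '[INFO] [0.0] [llama_cpp_node]: Recved string data: hello' |
    extract_recognized_text

# Show where audio_device is set in the VLM launch file, if it is installed.
launch_file="/opt/tros/${TROS_DISTRO:-humble}/share/hobot_llamacpp/launch/llama_vlm.launch.py"
if [ -f "$launch_file" ]; then
    grep -n "audio_device" "$launch_file" ||
        echo "audio_device not found in $launch_file"
fi
```

When the example is running, the same filter can be applied to live output by piping the launch command through it, for example by appending 2>&1 | extract_recognized_text to the ros2 launch line. If nothing is printed while you speak, ASR is not delivering text to the node.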