Development Guide

Requirements for Floating Point Model

symbolic_trace

Similar to PyTorch's quantization training, horizon_plugin_pytorch is designed and developed based on fx, so it requires that the floating-point model can correctly complete symbolic_trace.

Partial Operator Support Only

Since BPU only supports a limited number of operators, horizon_plugin_pytorch only supports the operators in the operator list and the special operators defined internally based on BPU restrictions.

Calibration Guide

In quantization, an important step is to determine the quantization parameters. Reasonable initial quantization parameters can significantly improve model accuracy and accelerate model convergence. Calibration is the process of inserting an Observer into a floating-point model and using a small amount of training data to statistically measure the data distribution at various points in the model's forward process to determine reasonable quantization parameters. Although quantization training can be done without calibration, it is generally beneficial to quantization training, so it is recommended that users consider this step as a mandatory option.

Process and Example

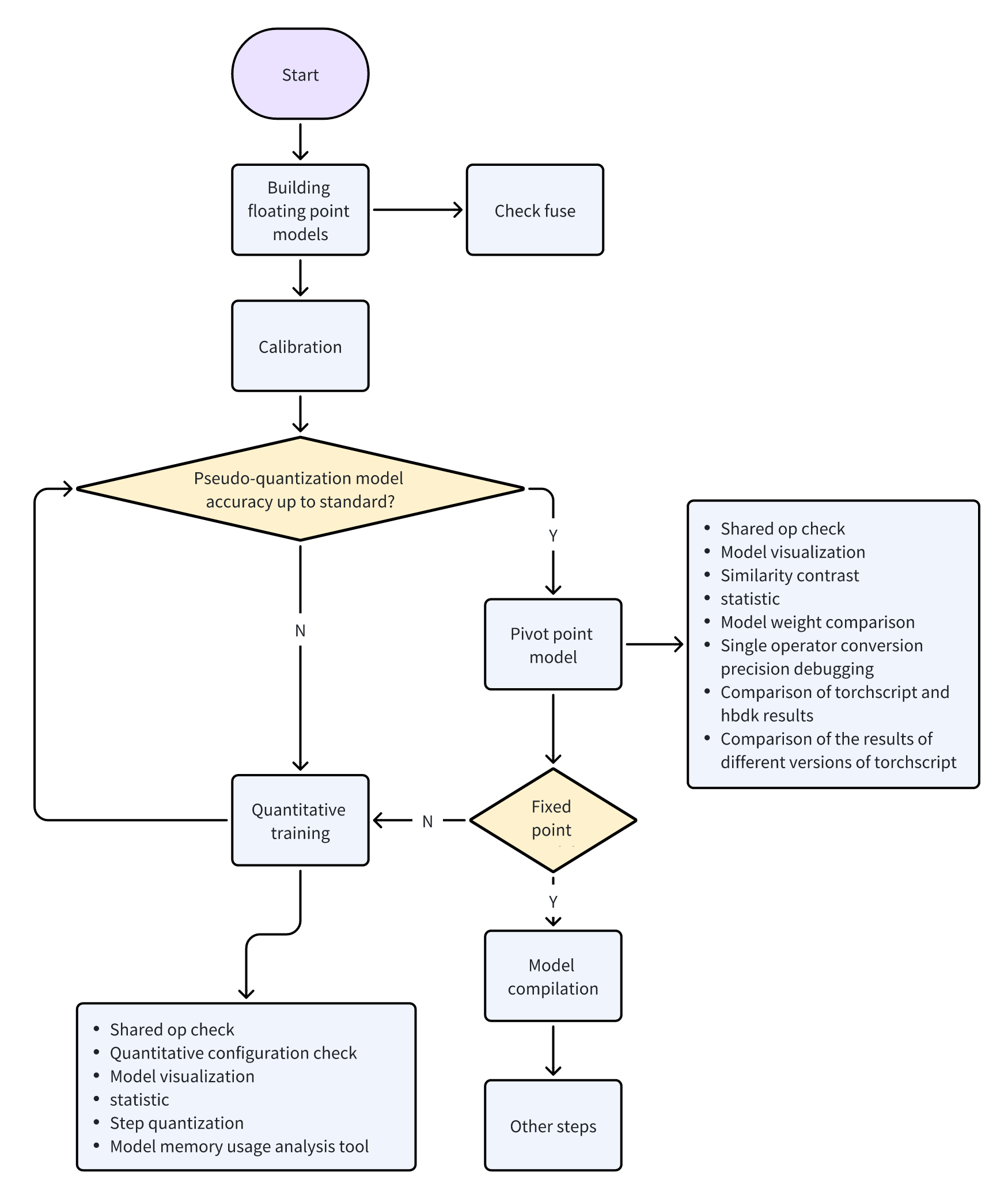

The overall process of Calibration and QAT is shown in the following figure:

The steps are described as follows:

-

Build and train a floating-point model. Refer to the Floating Point Model Acquisition section of the horizon_plugin_pytorch quick start chapter for more information.

-

Insert an Observer node into the floating-point model. Refer to the Calibration section of the horizon_plugin_pytorch quick start chapter for more information. Before converting the floating-point model using the

prepare_qat_fxmethod, the model needs to be set with aqconfig.model.qconfig = horizon.quantization.get_default_qconfig()get_default_qconfigcan set differentobserverforweightandactivation. Currently, calibration has optional observers including "min_max", "percentile", "mse", "kl", and "mix". If there are no special requirements, it is recommended to use the default "min_max" forweight_observerand "mse" foractivation_observer. Special usage and debugging techniques are described in the following common algorithm introduction.The

fake_quantparameter has no effect on the Calibration results, so it can be kept in the default state.def get_default_qconfig(

activation_fake_quant: Optional[str] = "fake_quant",

weight_fake_quant: Optional[str] = "fake_quant",

activation_observer: Optional[str] = "min_max",

weight_observer: Optional[str] = "min_max",

activation_qkwargs: Optional[Dict] = None,

weight_qkwargs: Optional[Dict] = None,

):- Set the

fake quantizestate toCALIBRATION.

horizon.quantization.set_fake_quantize(model, horizon.quantization.FakeQuantState.CALIBRATION)There are three states for

fake quantize, which need to be set to the corresponding state beforeQAT,calibration, andvalidation. In the calibration state, only the statistics of the inputs and outputs of each operator are observed. In the QAT state, besides observing the statistics, pseudo-quantization operations are also performed. And in the validation state, statistics are not observed, only pseudo-quantization operations are performed.class FakeQuantState(Enum):

QAT = "qat"

CALIBRATION = "calibration"

VALIDATION = "validation" - Set the

-

Calibration. Feed the prepared calibration data to the model, and observe the relevant statistics during the forward process by the observer.

-

Set the model state to

evaland set thefake quantizestate toVALIDATION.model.eval()

horizon.quantization.set_fake_quantize(model, horizon.quantization.FakeQuantState.VALIDATION) -

Validate the calibration effect. If the effect is satisfactory, the model can be directly converted to fixed point or quantization training can be performed based on it. If not satisfied, adjust the parameters in the

calibration qconfigto continue the calibration.

Introduction to Common Algorithms

For the parameter descriptions of each operator, please refer to the API documentation at the end of the document.

| Algorithm | Speed Ranking | Accuracy Ranking | Ease of Use Ranking |

|---|---|---|---|

| min_max | 1 | 5 | 1 |

| percentile | 2 | 4 | 4 |

| mse | 4 | 1 | 2 |

| kl | 5 | 2 | 3 |

| mix | 3 | 2 | 1 |

The table above shows the performance of several commonly used calibration methods, where smaller numbers indicate better performance. Speed represents the time consumed for calibrating the same data, accuracy represents the calibration effect of the method on most models, and ease of use represents the complexity of parameter tuning.

For the same model, different methods and parameters may have significant differences in accuracy/speed. Recent research also shows that there is no single method that can achieve the best accuracy on all models, and it is necessary to adjust the parameters accordingly. Therefore, it is recommended for users to try these calibration methods.

-

min_max. This method only calculates the sliding average of the maximum and minimum values, which is used to quickly determine common parameters such as Batch size and average_constant, without much technique involved.

-

percentile. This method has the highest upper limit of accuracy among all methods, but it is also the most difficult to tune. If the accuracy requirements can be met through other methods or the default parameters of this method, it is not recommended to spend too much time on parameter tuning. There are two adjustable parameters in percentile: bins and percentile. The more bins, the smaller the interval between candidate options for max, and the finer the granularity for adjustment, but it also means higher computational cost. It is recommended to determine the percentile first and then adjust the bins. Iterate between the two to narrow down the parameter range until satisfactory results are achieved. In most cases, taking 2048 as the bin provides sufficient adjustment granularity, and there is no need to separately adjust this parameter. The following is an example of the parameter tuning path for a model:

| Order | percentile | bins | accuracy |

|---|---|---|---|

| 1 | 99.99 | 2048 | 53.75 |

| 2 | 99.99 | 4096 | 54.38 |

| 3 | 99.995 | 4096 | 16.25 |

| 4 | 99.985 | 4096 | 32.67 |

| 5 | 99.9875 | 4096 | 57.06 |

| 6 | 99.9875 | 8192 | 62.84 |

| 7 | 99.98875 | 8192 | 57.62 |

| 8 | 99.988125 | 8192 | 63.15 |

In this example, it can be seen that after careful adjustment, the accuracy has improved by about 10%. There are significant differences between the inputs and outputs of different ops in the model. A set of global percentile parameters may be difficult to meet the requirements of all ops. When high accuracy is required, you can first find better global parameters using the method above, and then use debug tools to find the ops with large errors and individually set percentile parameters for these ops, following the setting method of qconfig. Here are several common data distributions that can cause significant errors:

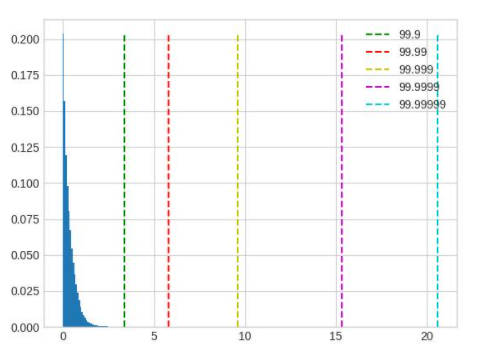

Long-tailed distribution, the value of percentile should be smaller. In the figure, 99.9 is a better value to choose.

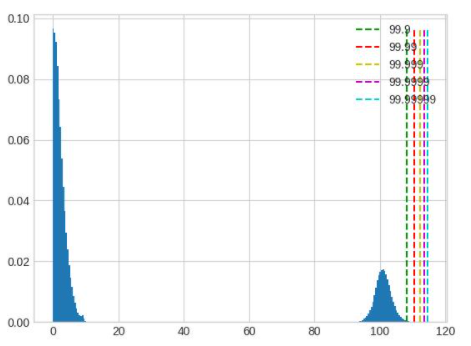

The value range is too large, and the distribution is not centralized. Whether to retain or ignore the tail will result in significant loss of accuracy. This situation should be avoided during training of floating-point models by adjusting parameters such as weight decay.

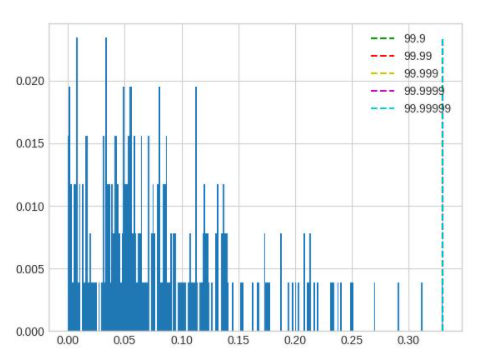

The output distribution of layernorm will show several highly concentrated areas. In this case, adjusting percentile according to the normal method will not have any impact on the quantization result. The adjustment range of percentile needs to be increased.

-

mse. The adjustable parameter is only the stride, with the default stride being 1. It will gradually try the 100th percentile of the maximum value and select the value corresponding to the percentile that minimizes the error (L2 distance) before and after quantization. This method is time-consuming for large models. Increasing the stride within a reasonable range can reduce the time consumption while ensuring accuracy. A too large stride can affect accuracy. Note that adjusting the parameters of this method can only optimize time consumption and cannot significantly improve accuracy.

-

kl. There are two adjustable parameters: bin and update_interval. Due to the long time consumption of this method, it is not recommended to adjust the default bin. The update_interval default is 1, increasing it can reduce time consumption, but it needs to ensure that update_interval is less than the total calibration step, otherwise it will not obtain normal quantization parameters.

-

mix. This method is mixed calibration. For each place that needs to be counted, different parameters of the percentile method will be attempted to select the method that minimizes the error (L2 distance) before and after quantization. It is highly automated and does not require adjustment of parameters.

Tuning techniques

-

The more calibration data, the better. However, due to the marginal effect, the improvement in accuracy will be very limited when the data volume becomes large. If the training set is small, all data can be used for calibration. If the training set is large, a suitable subset can be selected based on the time consumption of calibration. It is recommended to perform calibration for at least 10 - 100 steps.

-

Data augmentation such as horizontal flipping is recommended, but mosaic-like augmentation should be avoided. Calibration should be performed using pre-processing from the inference stage plus training data as much as possible.

-

The batch size should be as large as possible. If there is significant noise in the data or a large number of outliers in the model, it can be appropriately reduced. This parameter should be determined when trying the min max method.

-

average_constant indicates the influence of each step on the maximum and minimum values. The smaller the average_constant, the smaller the influence of the current step, and the greater the influence of the history sliding average. This parameter needs to be adjusted between 0.01 and 0.5 depending on the data volume. When the data volume is sufficient (step > 100), average_constant is set to 0.01. When the data volume is insufficient, average_constant can be increased appropriately. In extreme cases where there are only 2 steps of data, average_constant is set to 0.5. This parameter should be determined when trying the min max method and then used in other methods.

-

When the accuracy of the calibration model is good, fixing the quantization parameters of the feature map and performing QAT training can achieve better results. When the accuracy is poor, the fixed calibration quantization parameters should not be used. There is no clear standard for whether the accuracy is good or bad, and it needs to be tried. For example, if the accuracy of a certain model is 100, if the calibration accuracy is 50, then the accuracy is definitely not good. But if the calibration accuracy is 95, then whether this accuracy can reach the level of fixed feature map quantization parameters needs to be tried. The usual practice is to experiment with both fixed and unfixed quantization parameters for comparison.

-

It is recommended to try the min max method first, as it is the fastest. Use it to go through the calibration process, adjust and determine the batch size and average_constant parameters, and then try the percentile, kl, mse, and mix methods separately, selecting the method with the best performance.

Observer Parameters Documentation

class horizon_plugin_pytorch.quantization.observer_v2.KLObserver(bins: int = 512, update_interval: int = 1, averaging_constant: float = 0.01, ch_axis: int = - 1, dtype: Union[torch.dtype, horizon_plugin_pytorch.dtype.QuantDType] = 'qint8', qscheme: torch.qscheme = torch.per_tensor_symmetric, quant_min: int = None, quant_max: int = None, is_sync_quantize: bool = False, factory_kwargs: Dict = None)

KL observer. KL observer based on histogram. Histogram is calculated online and won’t be saved.

Parameters

-

bins - Number of histograms bins.

-

update_interval - Interval of computing KL entropy and update min/max. KLObserver will constantly collect histograms of activations, but only perform KL calculation when update_interval is satisfied. if it is set to 1, KL entropy will be computed every forward step. Larger interval guarantees less time and does no harm to calibration accuracy. Set it to the total calibration steps can achieve best performance. update_interval must be no greater than total calibration steps, otherwise no min/max will be computed.

-

averaging_constant - Averaging constant for min/max.

-

ch_axis - Channel axis.

-

dtype - Quantized data type.

-

qscheme - Quantization scheme to be used.

-

quant_min - Min quantization value. Will follow dtype if unspecified.

-

quant_max - Max quantization value. Will follow dtype if unspecified.

-

is_sync_quantize - If sync statistics when training with multiple devices.

-

factory_kwargs - kwargs which are passed to factory functions for min_val and max_val.

forward(x_orig)

Defines the computation performed at every call.

Should be overridden by all subclasses.

Although the recipe for forward pass needs to be defined within this function, one should call the Module instance afterwards instead of this since the former takes care of running the registered hooks while the latter silently ignores them.

class horizon_plugin_pytorch.quantization.observer_v2.MSEObserver(stride: int = 1, averaging_constant: float = 0.01, ch_axis: int = - 1, dtype: Union[torch.dtype, horizon_plugin_pytorch.dtype.QuantDType] = 'qint8', qscheme: torch.qscheme = torch.per_tensor_symmetric, quant_min: int = None, quant_max: int = None, is_sync_quantize: bool = False, factory_kwargs: Dict = None)

MSE observer.

Observer module for computing the quantization parameters based on the Mean Square Error (MSE) between the original tensor and the quantized one.

This observer linear searches the quantization scales that minimize MSE.

Parameters

-

stride – Searching stride. Larger value gives smaller search space, which means less computing time but possibly poorer accuracy. Default is 1. Suggests no greater than 20.

-

averaging_constant – Averaging constant for min/max.

-

ch_axis – Channel axis.

-

dtype – Quantized data type.

-

qscheme – Quantization scheme to be used.

-

quant_min – Min quantization value. Will follow dtype if unspecified.

-

quant_max – Max quantization value. Will follow dtype if unspecified.

-

is_sync_quantize – If sync statistics when training with multiple devices.

-

factory_kwargs – kwargs which are passed to factory functions for min_val and max_val.

forward(x_orig)

Defines the computation performed at every call.

Should be overridden by all subclasses.

Although the recipe for forward pass needs to be defined within this function, one should call the Module instance afterwards instead of this since the former takes care of running the registered hooks while the latter silently ignores them.

class horizon_plugin_pytorch.quantization.observer_v2.MinMaxObserver(averaging_constant: float = 0.01, ch_axis: int = - 1, dtype: Union[torch.dtype, horizon_plugin_pytorch.dtype.QuantDType] = 'qint8', qscheme: torch.qscheme = torch.per_tensor_symmetric, quant_min: int = None, quant_max: int = None, is_sync_quantize: bool = False, factory_kwargs: Dict = None)

Min max observer.

This observer computes the quantization parameters based on minimums and maximums of the incoming tensors. The module records the moving average minimum and maximum of incoming tensors, and uses this statistic to compute the quantization parameters.

Parameters- averaging_constant – Averaging constant for min/max.

- ch_axis – Channel axis.

- dtype – Quantized data type.

- qscheme – Quantization scheme to be used.

- quant_min – Min quantization value. Will follow dtype if unspecified.

- quant_max – Max quantization value. Will follow dtype if unspecified.

- is_sync_quantize – If sync statistics when training with multiple devices.

- factory_kwargs – kwargs which are passed to factory functions for min_val and max_val.

forward(x_orig)

Record the running minimum and maximum of x.

class horizon_plugin_pytorch.quantization.observer_v2.MixObserver(averaging_constant: float = 0.01, ch_axis: int = - 1, dtype: Union[torch.dtype, horizon_plugin_pytorch.dtype.QuantDType] = 'qint8', qscheme: torch.qscheme = torch.per_tensor_symmetric, quant_min: int = None, quant_max: int = None, is_sync_quantize: bool = False, factory_kwargs: Dict = None)

Mix observer.

This observer computes the quantization parameters based on multiple calibration methods and selects the quantization parameters with the smallest quantization error.

Parameters

-

averaging_constant – Averaging constant for min/max.

-

ch_axis – Channel axis.

-

dtype – Quantized data type.

-

qscheme – Quantization scheme to be used.

-

quant_min – Min quantization value. Will follow dtype if unspecified.

-

quant_max – Max quantization value. Will follow dtype if unspecified.

-

is_sync_quantize – If sync statistics when training with multiple devices.

-

factory_kwargs – kwargs which are passed to factory functions for min_val and max_val.

forward(x_orig)

Defines the computation performed at every call.

Should be overridden by all subclasses.

Although the recipe for forward pass needs to be defined within this function, one should call the Module instance afterwards instead of this since the former takes care of running the registered hooks while the latter silently ignores them.

class horizon_plugin_pytorch.quantization.observer_v2.PercentileObserver(percentile: float = 99.99, bins: int = 2048, averaging_constant: float = 0.01, ch_axis: int = - 1, dtype: Union[torch.dtype, horizon_plugin_pytorch.dtype.QuantDType] = 'qint8', qscheme: torch.qscheme = torch.per_tensor_symmetric, quant_min: int = None, quant_max: int = None, is_sync_quantize: bool = False, factory_kwargs: Dict = None)

Percentile observer.

Percentile observer based on histogram. Histogram is calculated online and won’t be saved. The minimum and maximum are moving averaged to compute the quantization parameters.

Parameters

-

percentile – Index percentile of histrogram

-

bins – Number of histograms bins.

-

averaging_constant – Averaging constant for min/max.

-

ch_axis – Channel axis.

-

dtype – Quantized data type.

-

qscheme – Quantization scheme to be used.

-

quant_min – Min quantization value. Will follow dtype if unspecified.

-

quant_max – Max quantization value. Will follow dtype if unspecified.

-

is_sync_quantize – If sync statistics when training with multiple devices.

-

factory_kwargs – kwargs which are passed to factory functions for min_val and max_val.

forward(x_orig)

Defines the computation performed at every call.

Should be overridden by all subclasses.

Although the recipe for forward pass needs to be defined within this function, one should call the Module instance afterwards instead of this since the former takes care of running the registered hooks while the latter silently ignores them.

class horizon_plugin_pytorch.quantization.MovingAverageMinMaxObserver(averaging_constant=0.01, dtype=torch.qint8, qscheme=torch.per_tensor_symmetric, quant_min=None, quant_max=None, is_sync_quantize=False, factory_kwargs=None)

MovingAverageMinMax Observer.

Observer module for computing the quantization parameters based on the moving average of the min and max values.

This observer computes the quantization parameters based on the moving averages of minimums and maximums of the incoming tensors. The module records the average minimum and maximum of incoming tensors, and uses this statistic to compute the quantization parameters.

Parameters

-

averaging_constant – Averaging constant for min/max.

-

dtype – Quantized data type

-

qscheme – Quantization scheme to be used, only support per_tensor_symmetric scheme

-

reduce_range – Reduces the range of the quantized data type by 1 bit

-

quant_min – Minimum quantization value.

-

quant_max – Maximum quantization value.

-

is_sync_quantize – Whether use sync quantize

-

factory_kwargs – Arguments for register data buffer

forward(x_orig)

Record the running minimum and maximum of x.

class horizon_plugin_pytorch.quantization.MovingAveragePerChannelMinMaxObserver(averaging_constant=0.01, ch_axis=0, dtype=torch.qint8, qscheme=torch.per_channel_symmetric, quant_min=None, quant_max=None, is_sync_quantize=False, factory_kwargs=None)

MovingAveragePerChannelMinMax Observer.

Observer module for computing the quantization parameters based on the running per channel min and max values.

This observer uses the tensor min/max statistics to compute the per channel quantization parameters. The module records the running minimum and maximum of incoming tensors, and uses this statistic to compute the quantization parameters.

Parameters

-

averaging_constant – Averaging constant for min/max.

-

ch_axis – Channel axis

-

dtype – Quantized data type

-

qscheme – Quantization scheme to be used, Only support per_channel_symmetric

-

quant_min – Minimum quantization value.

-

quant_max – Maximum quantization value.

-

is_sync_quantize – whether use sync quantize

-

factory_kwargs – Arguments for register data buffer

forward(x_orig)

Defines the computation performed at every call.

Should be overridden by all subclasses.

Although the recipe for forward pass needs to be defined within this function, one should call the Module instance afterwards instead of this since the former takes care of running the registered hooks while the latter silently ignores them.

Quantization Training Guide

Quantization training involves inserting some fake quantization nodes into the model to minimize precision loss when converting the quantization-trained model into a fixed-point model. Quantization training is similar to traditional model training, where developers can start from scratch and build a pseudo-quantized model, and then train that pseudo-quantized model. Due to various limitations of the deployed hardware platforms, it is relatively difficult for developers to understand these limitations and build pseudo-quantized models accordingly. The quantization training tool automatically inserts pseudo-quantization operators based on the limitations of the deployment platform into the provided floating-point model, reducing the threshold for developers to develop quantized models.

Quantization training is generally more challenging than training pure floating-point models due to the various restrictions imposed. The goal of the quantization training tool is to reduce the difficulty of quantization training and the engineering complexity of deploying quantized models.

Process and Examples

Although the quantization training tool does not require users to start from a pre-trained floating-point model, experience has shown that starting quantization training from a pre-trained high-precision floating-point model can greatly reduce the difficulty of quantization training.

from horizon_plugin_pytorch.quantization import get_default_qconfig

# Convert the model to QAT state

default_qat_8bit_fake_quant_qconfig = get_default_qconfig(

activation_fake_quant="fake_quant",

weight_fake_quant="fake_quant",

activation_observer="min_max",

weight_observer="min_max",

activation_qkwargs=None,

weight_qkwargs={

"qscheme": torch.per_channel_symmetric,

"ch_axis": 0,

},

)

default_qat_out_8bit_fake_quant_qconfig = get_default_qconfig(

activation_fake_quant=None,

weight_fake_quant="fake_quant",

activation_observer=None,

weight_observer="min_max",

activation_qkwargs=None,

weight_qkwargs={

"qscheme": torch.per_channel_symmetric,

"ch_axis": 0,

},

)

qat_model = prepare_qat_fx(

float_model,

{

"": default_qat_8bit_fake_quant_qconfig,

"module_name": {

"classifier": default_qat_out_8bit_fake_quant_qconfig,

},

},

).to(device)

# Load the quantization parameters from the Calibration model

qat_model.load_state_dict(calib_model.state_dict())

# Perform quantization-aware training

# As a fine-tuning process, quantization-aware training generally requires a smaller learning rate

optimizer = torch.optim.SGD(

qat_model.parameters(), lr=0.0001, weight_decay=2e-4

)

for nepoch in range(epoch_num):

# Note the training state control method for QAT model here

qat_model.train()

set_fake_quantize(qat_model, FakeQuantState.QAT)

train_one_epoch(

qat_model,

nn.CrossEntropyLoss(),optimizer,

None,

train_data_loader,

device,

)

# Note the control method for QAT model eval state here

qat_model.eval()

set_fake_quantize(qat_model, FakeQuantState.VALIDATION)

# Test accuracy of qat model

top1, top5 = evaluate(

qat_model,

eval_data_loader,

device,

)

print(

"QAT model: evaluation Acc@1 {:.3f} Acc@5 {:.3f}".format(

top1.avg, top5.avg

)

)

# Test accuracy of quantized model

quantized_model = convert_fx(qat_model.eval()).to(device)

top1, top5 = evaluate(

quantized_model,

eval_data_loader,

device,

)

print(

"Quantized model: evaluation Acc@1 {:.3f} Acc@5 {:.3f}".format(

top1.avg, top5.avg

)

)

Due to limitations of the underlying platform, QAT models cannot fully represent the accuracy of the final deployed model. It is important to monitor the accuracy of the quantized model to ensure that it is performing as expected, otherwise there may be issues with the deployed model.

From the above example code, it can be seen that quantization-aware training has two additional steps compared to traditional pure floating-point model training:

- prepare_qat_fx

- Load calibration model parameters

prepare_qat_fx

The objective of this step is to transform the floating-point network by inserting quantization nodes.

Loading Calibration Model Parameters

By loading the pseudo quantization parameters obtained from Calibration, a better initialization can be achieved.

Training Iterations

At this point, the construction of the pseudo quantization model and the initialization of the parameters have been completed. Now, regular training iterations and model parameter updates can be performed, while monitoring the accuracy of the quantized model.

Pseudo Quantization Operator

The main difference between quantization training and traditional floating-point model training lies in the insertion of pseudo quantization operators. Different quantization training algorithms are also represented through pseudo quantization operators. Therefore, let's introduce the pseudo quantization operator here.

Since BPU only supports symmetric quantization, we will take symmetric quantization as an example here.

Pseudo Quantization Process

Taking int8 quantization training as an example, the calculation process of the pseudo quantization operator is generally as follows:

fake_quant_x = clip(round(x / scale), -128, 127) * scale

Like Conv2d, which optimizes weight and bias parameters through training, the pseudo quantization operator needs to optimize the scale parameter through training. However, due to the fact that round acts as a step function and its gradient is 0, the pseudo quantization operator cannot be directly trained through gradient backpropagation. To solve this problem, there are usually two solutions: statistical methods and "learning"-based methods.

Statistical Methods

The goal of quantization is to uniformly map floating-point numbers in the Tensor to the range [-128, 127] represented by int8 using the scale parameter. Since it is a uniform mapping, it is easy to calculate the scale:

def compute_scale(x: Tensor):

xmin, xmax = x.max(), maxv = x.min()

return max(xmin.abs(), xmax.abs()) / 256.0

Due to the non-uniform distribution of data in the Tensor and the out-of-range problem, different methods for computing xmin and xmax have emerged. You can refer to MovingAverageMinMaxObserver and other methods.

Please refer to default_qat_8bit_fake_quant_qconfig and related interfaces for usage in the tool.

"Learning"-Based Methods

Although the gradient of round is 0, researchers have found through experiments that setting its gradient to 1 can also make the model converge to the expected accuracy in this scenario.

def round_ste(x: Tensor):

return (x.round() - x).detach() + x

Please refer to default_qat_8bit_lsq_quant_qconfig and its related interfaces for instructions on how to use the tool.

Users who are interested in further understanding can refer to the following paper: Learned Step Size Quantization

Heterogeneous Model Guide

Introduction to Heterogeneous Models

A heterogeneous model is a model where part of it runs on the BPU and the other part runs on the CPU during deployment, as opposed to a homogeneous model which runs entirely on the BPU. Typically, the following two types of models become heterogeneous models during deployment:

-

Models that contain operators not supported by the BPU.

-

Models where certain operators are specified to run on the CPU due to large quantization error.

Workflow

By using 'prepare', the floating-point model is converted into a QAT model, which is then trained and exported as an ONNX format model, and finally converted into a binary model using the hb_mapper tool.

Users can obtain heterogeneous fixed-point models through the conversion process for model accuracy evaluation.

Operator Limitations

Since the heterogeneous model is integrated with horizon_nn, its operator support is the same as that of horizon_nn.

Main Interface Parameter Description

horizon_plugin_pytorch.quantization.prepare_qat_fx

- Set

hybrid=Trueto enable the heterogeneous model functionality. - Users can specify certain BPU-supported operators to run on the CPU by setting the

hybrid_dictparameter.

def prepare_qat_fx(

model: Union[torch.nn.Module, GraphModule],

qconfig_dict: Dict[str, Any] = None,

prepare_custom_config_dict: Dict[str, Any] = None,

optimize_graph: bool = False,

hybrid: bool = False,

hybrid_dict: Dict[str, List] = None,

) -> ObservedGraphModule:

"""Prepare QAT Model

`model`: torch.nn.Module or GraphModule (model after fuse_fx)

`qconfig_dict`: Define Qconfig. If eager mode within module is used in addition to qconfig_dict, the qconfig defined within the module takes priority. The configuration format of qconfig_dict is as follows:

qconfig_dict = {

# Optional, global configuration

"": qconfig,

# Optional, configure by module type

"module_type": [(torch.nn.Conv2d, qconfig), ...],

# Optional, configure by module name

"module_name": [("foo.bar", qconfig),...],

# Priority: global < module_type < module_name < module.qconfig

# The qconfig for non-module types of operators defaults to be consistent with the qconfig of their parent module.

# If you need to set it separately, please encapsulate this part into a separate module.

`prepare_custom_config_dict`: Custom configuration dictionary

prepare_custom_config_dict = {

# Currently only preserved_attributes is supported. Generally, all properties will be automatically preserved.

# This option is just in case, and is rarely used.

"preserved_attributes": ["preserved_attr"],

}

`optimize_graph`: Keep the input and output scale of "cat" consistent. Currently only effective in the Bernoulli architecture.

`hybrid`: Whether to use hybrid mode. Hybrid mode must be enabled in the following cases:

1. The model contains operators not supported by BPU, or the user wants to specify some BPU operators to fall back to CPU.

2. The user wants to quantize the QAT model with horizon_nn.

`hybrid_dict`: Define the user-specified CPU operator.

hybrid_dict = {

# Optional, configure by module type

"module_type": [torch.nn.Conv2d, ...],

# Optional, configure by module name

"module_name": ["foo.bar", ...],

# Priority: module_type < module_name

# Similar to qconfig_dict, if you want non-module types of operators to run on CPU, you need to encapsulate this part into a separate module.

}

"""

horizon_plugin_pytorch.utils.onnx_helper.export_to_onnx

Export the onnx model to integrate with hb_mapper.

This interface also supports non-hybrid models, and the exported ONNX format model is only used for visualization.

def export_to_onnx(

model,

args,

f,

export_params=True,

verbose=False,

training=TrainingMode.EVAL,

input_names=None,

output_names=None,

operator_export_type=OperatorExportTypes.ONNX_FALLTHROUGH,

opset_version=11,

do_constant_folding=True,

example_outputs=None,

strip_doc_string=True,

dynamic_axes=None,

keep_initializers_as_inputs=None,

custom_opsets=None,

enable_onnx_checker=False,

):

"""This interface is basically the same as torch.onnx.export, hiding the parameters that do not need modification. The parameters that need attention are:

`model`: The model to be exported

`args`: Model input for tracing the model

`f`: Filename or file descriptor for saving the onnx file

`operator_export_type`: Operator export type

1. For non-heterogeneous models, onnx is only used for visualization and does not need to be guaranteed to be actually available. The default value is OperatorExportTypes.ONNX_FALLTHROUGH.

2. For heterogeneous models, onnx needs to be guaranteed to be actually available, and None is used to ensure that the exported operator is a standard onnx operator.

`opset_version`: Can only be 11. horizon_plugin_pytorch has registered specific mapping rules in opset 11.

Note: If you use the public torch.onnx.export, make sure the above parameters are set correctly,

and import horizon_plugin_pytorch.utils._register_onnx_ops to register specific mapping rules in opset 11.

"""

horizon_plugin_pytorch.quantization.convert_fx

You can reuse convert_fx to convert the quantized fake quantization model into a heterogeneous quantization model for model accuracy evaluation.

Heterogeneous quantization models obtained through convert_fx cannot be deployed. They are currently only used for model accuracy evaluation.

def convert_fx(

graph_module: GraphModule,

convert_custom_config_dict: Dict[str, Any] = None,

_remove_qconfig: bool = True,

) -> QuantizedGraphModule:

"""Convert the QAT model, only used for evaluating the fixed-point model.

`graph_module`: The model after prepare->(calibration)->train

`convert_custom_config_dict`: Custom configuration dictionary

convert_custom_config_dict = {

# Only support preserved_attributes for now. Generally, all attributes will be automatically preserved, and this option is rarely used.

"preserved_attributes": ["preserved_attr"],

}

`_remove_qconfig`: Whether to remove qconfig after conversion, which is generally not used.

"""

Process and Example1. Transform floating-point model.

-

Insert

QuantStubandDeQuantStubto keep consistent usage with non-heterogeneous mode.-

If the first op is

cpu op, then there is no need to insertQuantStub. -

If the last op is

cpu op, thenDeQuantStubcan be omitted.

-

-

For non-

moduleoperations, if separateqconfigsettings or specifying running on CPU is required, they need to be encapsulated into amodule. Please refer to_SeluModulein the example for details.

-

Set

march. Setbernoulli2for RDK X3 and setbayesfor RDK Ultra. -

Set

qconfig. Retain the configuration method of settingqconfigwithinmodulein non-heterogeneous mode. In addition to this,qconfigcan also be passed through theqconfig_dictparameter of theprepare_qat_fxinterface. For detailed usage, please refer to the interface parameter description.-

For

BPU op, it is necessary to ensure that there is aqconfig. If its input op is notQuantStub, then the input op also needs to have anactivation qconfig. -

For

CPU op,qconfigwill not have any impact on it, but if it is followed by aBPU op, aqconfigmust be specified. -

Recommended setting method: first set the global

qconfigtohorizon.quantization.default_qat_8bit_fake_quant_qconfig(orhorizon.quantization.default_calib_8bit_fake_quant_qconfig, depending on calibration or qat stage), and then modify it according to the requirements. Generally, onlyqconfigforint16and high-precision output ops needs to be set separately.

-

Currently, only the RDK Ultra with the BPU architecture set to BAYES supports setting the int16 quantization.

-

Set

hybrid_dict. Optional, for detailed usage, please refer to the interface parameter description. If there are no explicitly specified CPU ops,hybrid_dictdoes not need to be set. -

Call

prepare_qat_fxand performcalibration. Refer to the Calibration section in the horizon_plugin_pytorch development guide. -

Call

prepare_qat_fx, load thecalibrationmodel, and perform QAT training. Refer to the Quantization Training section in the horizon_plugin_pytorch development guide. -

Call

convert_fx. Optional, can be skipped if there is no need to evaluate the precision of the fixed-point model. -

Call

export_to_onnx.torch.onnx.exportcan also be used, but the precautions in theexport_to_onnxinterface description must be followed. -

Use

hb_mapperto transform the onnx model. After transformation, check whether the operators are running on the expected device. In some cases,hb_mapperstill needs to set therun_on_cpuparameter. For example: althoughconvis not quantized in the QAT stage,hb_mapperwill still default to quantizing it because its input (output of the previous operator) goes through pseudo quantization.

import copy

import numpy as np

import torch

from horizon_plugin_pytorch.march import March, set_march

from horizon_plugin_pytorch.nn import qat

from horizon_plugin_pytorch.quantization import (

prepare_qat_fx,

convert_fx,set_fake_quantize,

FakeQuantState,

load_observer_params,

)

from horizon_plugin_pytorch.quantization.qconfig import (

default_calib_8bit_fake_quant_qconfig,

default_calib_out_8bit_fake_quant_qconfig,

default_qat_8bit_fake_quant_qconfig,

default_qat_out_8bit_fake_quant_qconfig,

)

from torch import nn

from torch.quantization import DeQuantStub, QuantStub

from horizon_plugin_pytorch.utils.onnx_helper import export_to_onnx

class _ConvBlock(nn.Module):

def __init__(self, channels=3):

super().__init__()

self.conv = nn.Conv2d(channels, channels, 1)

self.prelu = torch.nn.PReLU()

def forward(self, input):

x = self.conv(input)

x = self.prelu(x)

return torch.nn.functional.selu(x)

# Wrap functional selu into a module for separate setting

class _SeluModule(nn.Module):

def forward(self, input):

return torch.nn.functional.selu(input)

class HybridModel(nn.Module):

def __init__(self, channels=3):

super().__init__()

# Insert QuantStub

self.quant = QuantStub()

self.conv0 = nn.Conv2d(channels, channels, 1)

self.prelu = torch.nn.PReLU()

self.conv1 = _ConvBlock(channels)

self.conv2 = nn.Conv2d(channels, channels, 1)

self.conv3 = nn.Conv2d(channels, channels, 1)

self.conv4 = nn.Conv2d(channels, channels, 1)

self.selu = _SeluModule()

# Insert DequantStub

self.dequant = DeQuantStub()

self.identity = torch.nn.Identity()

def forward(self, input):

x = self.quant(input)

x = self.conv0(x)

x = self.identity(x)x = self.prelu(x)

x = torch.nn.functional.selu(x)

x = self.conv1(x)

x = self.conv2(x)

x = self.conv3(x)

x = self.identity(x)

x = self.conv4(x)

x = self.selu(x)

return self.dequant(x)

# Set march **RDK X3** to BERNOULLI2, and set **RDK Ultra** to BAYES.

set_march(March.BAYES)

data_shape = [1, 3, 224, 224]

data = torch.rand(size=data_shape)

model = HybridModel()

qat_model = copy.deepcopy(model)

# Do not perform inference on the float model after prepare_qat_fx, as prepare_qat_fx modifies the float model in-place.

float_res = model(data)

calibration_model = prepare_qat_fx(

model,

{

"": default_calib_8bit_fake_quant_qconfig,

# selu is a CPU operator, and conv4 is the output of the BPU model, set as high-precision output.

"module_name": [("conv4", default_calib_out_8bit_fake_quant_qconfig)]

},

hybrid=True,

hybrid_dict={

"module_name": ["conv1.conv", "conv3"],

"module_type": [_SeluModule],

},

)

# Ensure the original model does not change during the calibration phase.

calibration_model.eval()

set_fake_quantize(calibration_model, FakeQuantState.CALIBRATION)

for i in range(5):

calibration_model(torch.rand(size=data_shape))

qat_model = prepare_qat_fx(

qat_model,

{

"": default_qat_8bit_fake_quant_qconfig,

# selu is a CPU operator, and conv4 is the output of the BPU model, set as high-precision output.

"module_name": [("conv4", default_qat_out_8bit_fake_quant_qconfig)]

},

hybrid=True,

hybrid_dict={

"module_name": ["conv1.conv", "conv3"],

"module_type": [_SeluModule],

},

)

load_observer_params(calibration_model, qat_model)

set_fake_quantize(calibration_model, FakeQuantState.QAT)

# qat training start

# ......

# qat training end

# Export qat.onnx

export_to_onnx(

qat_model,

data,

"qat.onnx",

operator_export_type=None,

)

# Evaluate quantized model

quantize_model = convert_fx(qat_model)

quantize_res = quantize_model(data)

Print the results of the QAT model.

HybridModel(

(quant): QuantStub(

(activation_post_process): FakeQuantize(

fake_quant_enabled=tensor([1], dtype=torch.uint8), observer_enabled=tensor([1], dtype=torch.uint8), quant_min=-128, quant_max=127, dtype=qint8, qscheme=torch.per_tensor_symmetric, ch_axis=-1, scale=tensor([0.0078]), zero_point=tensor([0])

(activation_post_process): MovingAverageMinMaxObserver(min_val=tensor([-0.9995]), max_val=tensor([0.9995]))

)

)

(conv0): Conv2d(

3, 3, kernel_size=(1, 1), stride=(1, 1)

(weight_fake_quant): FakeQuantize(

fake_quant_enabled=tensor([1], dtype=torch.uint8), observer_enabled=tensor([1], dtype=torch.uint8), quant_min=-128, quant_max=127, dtype=qint8, qscheme=torch.per_channel_symmetric, ch_axis=0, scale=tensor([0.0038, 0.0041, 0.0016]), zero_point=tensor([0, 0, 0])

(activation_post_process): MovingAveragePerChannelMinMaxObserver(min_val=tensor([-0.4881, -0.4944, 0.0787]), max_val=tensor([-0.1213, 0.5284, 0.1981]))

)

(activation_post_process): FakeQuantize(

fake_quant_enabled=tensor([1], dtype=torch.uint8), observer_enabled=tensor([1], dtype=torch.uint8), quant_min=-128, quant_max=127, dtype=qint8, qscheme=torch.per_tensor_symmetric, ch_axis=-1, scale=tensor([0.0064]), zero_point=tensor([0])

(activation_post_process): MovingAverageMinMaxObserver(min_val=tensor([-0.8159]), max_val=tensor([0.8159]))

)

)

(prelu): PReLU(num_parameters=1)

(conv1): _ConvBlock(

(conv): Conv2d(3, 3, kernel_size=(1, 1), stride=(1, 1))

(prelu): PReLU(num_parameters=1)

)

(conv2): Conv2d(3, 3, kernel_size=(1, 1), stride=(1, 1)

(weight_fake_quant): FakeQuantize(

fake_quant_enabled=tensor([1], dtype=torch.uint8), observer_enabled=tensor([1], dtype=torch.uint8), quant_min=-128, quant_max=127, dtype=qint8, qscheme=torch.per_channel_symmetric, ch_axis=0, scale=tensor([0.0040, 0.0044, 0.0040]), zero_point=tensor([0, 0, 0])

(activation_post_process): MovingAveragePerChannelMinMaxObserver(

min_val=tensor([-0.5044, -0.4553, -0.5157]), max_val=tensor([0.1172, 0.5595, 0.4104])

)

)

(activation_post_process): FakeQuantize(

fake_quant_enabled=tensor([1], dtype=torch.uint8), observer_enabled=tensor([1], dtype=torch.uint8), quant_min=-128, quant_max=127, dtype=qint8, qscheme=torch.per_tensor_symmetric, ch_axis=-1, scale=tensor([0.0059]), zero_point=tensor([0])

(activation_post_process): MovingAverageMinMaxObserver(

min_val=tensor([-0.7511]), max_val=tensor([0.7511])

)

)

(conv3): Conv2d(3, 3, kernel_size=(1, 1), stride=(1, 1))

(conv4): Conv2d(

3, 3, kernel_size=(1, 1), stride=(1, 1)

(weight_fake_quant): FakeQuantize(

fake_quant_enabled=tensor([1], dtype=torch.uint8), observer_enabled=tensor([1], dtype=torch.uint8), quant_min=-128, quant_max=127, dtype=qint8, qscheme=torch.per_channel_symmetric, ch_axis=0, scale=tensor([0.0025, 0.0037, 0.0029]), zero_point=tensor([0, 0, 0])

(activation_post_process): MovingAveragePerChannelMinMaxObserver(

min_val=tensor([-0.2484, -0.4718, -0.3689]), max_val=tensor([0.3239, -0.0056, 0.3312])

)

)

(activation_post_process): None

)

(selu): _SeluModule()

(dequant): DeQuantStub()

(identity): Identity()

(prelu_input_dequant): DeQuantStub()

(selu_1_activation_post_process): _WrappedCalibFakeQuantize(

(activation_post_process): FakeQuantize(

fake_quant_enabled=tensor([1], dtype=torch.uint8), observer_enabled=tensor([1], dtype=torch.uint8), quant_min=-128, quant_max=127, dtype=qint8, qscheme=torch.per_tensor_symmetric, ch_axis=-1, scale=tensor([0.0042]), zero_point=tensor([0])

(activation_post_process): MovingAverageMinMaxObserver(

min_val=tensor([-0.5301]), max_val=tensor([0.5301])

)

)

)

(conv3_activation_post_process): _WrappedCalibFakeQuantize(

(activation_post_process): FakeQuantize(

fake_quant_enabled=tensor([1], dtype=torch.uint8), observer_enabled=tensor([1], dtype=torch.uint8), quant_min=-128, quant_max=127, dtype=qint8, qscheme=torch.per_tensor_symmetric, ch_axis=-1, scale=tensor([0.0072]), zero_point=tensor([0])

(activation_post_process): MovingAverageMinMaxObserver(

min_val=tensor([-0.9156]), max_val=tensor([0.9156])

)

)

)

(conv3_input_dequant): DeQuantStub()

(selu_2_input_dequant): DeQuantStub()

)

def forward(self, input):

input_1 = input

quant = self.quant(input_1); input_1 = None

conv0 = self.conv0(quant); quant = None

identity = self.identity(conv0); conv0 = None

prelu_input_dequant_0 = self.prelu_input_dequant(identity); identity = None

prelu = self.prelu(prelu_input_dequant_0); prelu_input_dequant_0 = None

selu = torch.nn.functional.selu(prelu, inplace = False); prelu = None

conv1_conv = self.conv1.conv(selu); selu = None

conv1_prelu = self.conv1.prelu(conv1_conv); conv1_conv = None

selu_1 = torch.nn.functional.selu(conv1_prelu, inplace = False); conv1_prelu = None

selu_1_activation_post_process = self.selu_1_activation_post_process(selu_1); selu_1 = None

conv2 = self.conv2(selu_1_activation_post_process); selu_1_activation_post_process = None

conv3_input_dequant_0 = self.conv3_input_dequant(conv2); conv2 = None

conv3 = self.conv3(conv3_input_dequant_0); conv3_input_dequant_0 = None

conv3_activation_post_process = self.conv3_activation_post_process(conv3); conv3 = None

identity_1 = self.identity(conv3_activation_post_process); conv3_activation_post_process = None

conv4 = self.conv4(identity_1); identity_1 = None

selu_2_input_dequant_0 = self.selu_2_input_dequant(conv4); conv4 = None

selu_2 = torch.nn.functional.selu(selu_2_input_dequant_0, inplace = False); selu_2_input_dequant_0 = None

dequant = self.dequant(selu_2); selu_2 = None

return dequant

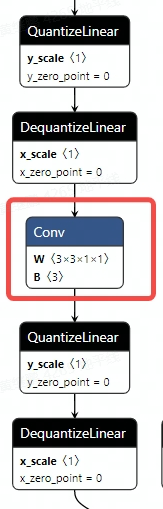

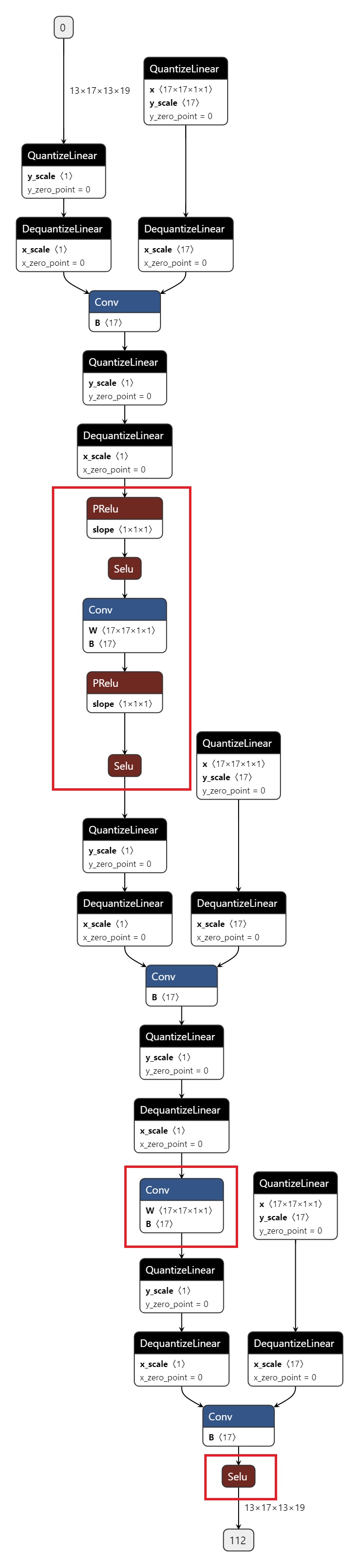

The exported ONNX model shown in the image contains CPU operators highlighted in red circles.

Guide to Analysis Tools

When encountering precision issues with QAT or quantized models, you can use various tools provided to analyze the models and identify precision drop points.

Overview

The following table summarizes the usage interfaces and scenarios of various tools. Except for the model visualization tool, all other tools are in the horizon_plugin_pytorch.utils.quant_profiler package.

| Tool | Usage Interface/Method | Scenario |

|---|---|---|

| Integration Interface | model_profiler | Call other debug tools and display the results centrally in an HTML page; Currently, it calls similarity, statistics, shared op check, fuse check, weight comparison, and quantization configuration check tools. |

| Fuse Check | check_unfused_operations | Check if there are op patterns in floating-point models that can be fused but are not fused. |

| Shared Op Check | get_module_called_count | Check if there are shared-used ops in the model. |

| Quantization Configuration Check | check_qconfig | Check if the quantization configuration in the QAT model meets expectations. |

| Model Visualization | export_to_onnx export_quantized_onnx | Export ONNX models to view the model structure. Does not support ONNX run. |

| Similarity Comparison | featuremap_similarity | Locate problematic ops when the precision of quantized models decreases. |

| Statistics | get_raw_features / profile_featuremap | Output numerical features of each layer's output in the model to evaluate whether the current data distribution and quantization precision are suitable for quantization. |

| Model Weight Comparison | compare_weights | Compare the similarity of weights in each layer of the model. |

| Step Quantization | qconfig=None | When training QAT models is difficult, identify the bottleneck of precision loss by setting a part of the model to floating point. |

| Single Operator Conversion Precision Debugging | set_preserve_qat_mode | When the precision of QAT model conversion to fixed point decreases, identify the bottleneck of precision loss by replacing some ops in the fixed-point model with QAT forms using this interface. |

| Heterogeneous Model Deployment Device Check | check_deploy_device | Check whether each op runs on BPU or CPU as expected during the deployment of heterogeneous models. |

| Comparison of TorchScript and HBDK Results | script_profile | Compare whether the results of each op in the fixed-point pt generated by horizon_plugin_pytorch and HBDK are consistent. |

| Comparison of Results of Different Versions of TorchScript | compare_script_models | Compare the results of each op in the fixed-point pt generated by horizon_plugin_pytorch using different versions. |

| Model CUDA Memory Consumption Analysis Tool | show_cuda_memory_consumption | Analyze the model's CUDA memory consumption to identify memory bottlenecks. |

Integrated Interface

For convenience in usage and visualization, horizon_plugin_pytorch provides an integrated interface model_profiler. This interface invokes other debug tools and consolidates the results into an HTML page, where the results of all other debug tools are also simultaneously saved. Currently, it invokes several tools including similarity analysis, statistics, shared operation check, fuse check, weight comparison, and quantization configuration check.

This interface involves the comparison between two models. In fx mode, the model conversion process is by default inplace. If you need to use this tool, please manually deepcopy the original model before conversion. Otherwise, after conversion, it will incorrectly compare two identical models.

# from horizon_plugin_pytorch.utils.quant_profiler import model_profiler

def model_profiler(

model1: torch.nn.Module,

model2: torch.nn.Module,

example_inputs: Any,

mode: str,

out_dir: Optional[str] = None,

kwargs_dict: Optional[dict] = None,

):

"""Run various inspection and analysis tools and display the results in one HTML page.

This function will compare:

1) The similarity and statistics of each op in two models, and the similarity of weights, while checking shared ops in the model.

2) Check if there are unfused patterns in the floating point model and the quantization configuration in the QAT model.

The results will be displayed in `profiler.html`.

Note:

1) This interface only supports comparing adjacent stages of the same model, in the order of conversion. For example, "floating point vs QAT" or "QAT vs fixed point". Comparing directly between floating point and fixed point models, or using the order of "QAT model vs floating point model" is not supported.

2) The ONNX visualization of the model structure as well as the histogram of feature maps of each layer are not displayed in the HTML page. You can manually call `export_to_onnx/export_quantized_onnx` and `profile_featuremap(with_tensorboard=True)`. In addition, this interface also supports passing custom arguments for calling each debug tool through the `kwargs_dict` parameter.

Parameters:

model1: Floating Point/Calibrated/QAT model

model2: Calibrated/QAT/Fixed Point model

example_inputs: Model input

mode: Indicates which two models to compare, only supports the following three modes

- `FvsQ`: Floating point model vs QAT/calibration model

- `QvsQ`: QAT model vs quantized model

- `CvsQ`: Calibration model vs QAT model

out_dir: Specify the result file `profiler.html` and the path for all debug tool invocation results. Default is `None`, which will generate a `profiler` directory in the `ckpt_dir` specified directory or the current directory, and store all results in that directory.

kwargs_dict: Parameters for calling other debug tools, provided as a `dict`. **You can refer to the specific introduction of each tool above for the specific parameters.** Support 7 key values

1) `featuremap_similarity`: similarity

2) `get_raw_features`: calculate the relevant features of each layer op input/output feature

3) `profile_featuremap`: statistics function, output the maximum, minimum, mean, and variance of each layer results in the model

4) `get_module_called_count`: check if there are shared ops in the model5) `check_unfused_operations`: Check if the model has unfused patterns

6) `compare_weights`: Compare the similarity of weights in two models

7) `check_qconfig`: Check the Qconfig configuration in the QAT model

Note:

1) The parameters `model` and `example_inputs` are defined in the `model_profiler` interface. The `kwargs_dict` must not have definitions for these two parameters.

2) The `out_dir` parameter in `kwargs_dict` will be replaced by the `out_dir` parameter in the `model_profiler` interface.

"""

Example usage:

from copy import deepcopy

import numpy as np

import pytest

import torch

from torch import nn

from torch.quantization import DeQuantStub, QuantStub

import horizon_plugin_pytorch as horizon

from horizon_plugin_pytorch import nn as horizon_nn

from horizon_plugin_pytorch.march import March, set_march

from horizon_plugin_pytorch.nn.quantized import FloatFunctional

from horizon_plugin_pytorch.qat_mode import QATMode, set_qat_mode

from horizon_plugin_pytorch.quantization import (

convert_fx,

prepare_qat_fx,

)

from horizon_plugin_pytorch.quantization.qconfig import (

default_qat_8bit_fake_quant_qconfig,

)

from horizon_plugin_pytorch.utils.quant_profiler import model_profiler

class Conv2dModule(nn.Module):

def __init__(

self,

in_channels,

out_channels,

kernel_size=1,

stride=1,

padding=0,

dilation=1,

groups=1,

bias=True,

padding_mode="zeros",

):

super().__init__()

self.conv2d = nn.Conv2d(

in_channels,

out_channels,

kernel_size,

stride,

padding,

dilation,

groups,

bias,

padding_mode,

)

self.add = FloatFunctional()

self.bn_mod = nn.BatchNorm2d(out_channels)

self.relu_mod = nn.ReLU()

def forward(self, x, y):

x = self.conv2d(x)

x = self.bn_mod(x)

x = self.add.add(x, y)

x = self.relu_mod(x)

return x

class TestFuseNet(nn.Module):

def __init__(self, channels) -> None:

super().__init__()

self.quantx = QuantStub()

self.quanty = QuantStub()

self.convmod1 = Conv2dModule(channels, channels)

self.convmod2 = Conv2dModule(channels, channels)

self.convmod3 = Conv2dModule(channels, channels)

self.shared_conv = nn.Conv2d(channels, channels, 1)

self.bn1 = nn.BatchNorm2d(channels)

self.bn2 = nn.BatchNorm2d(channels)

self.sub = FloatFunctional()

self.relu = nn.ReLU()

self.dequant = DeQuantStub()

def forward(self, x, y):

x = self.quantx(x)

y = self.quanty(y)

x = self.convmod1(x, y)

x = self.convmod2(y, x)

x = self.convmod3(x, y)

x = self.shared_conv(x)

x = self.bn1(x)

y = self.shared_conv(y)

y = self.bn2(y)

x = self.sub.sub(x, y)

x = self.relu(x)

return self.dequant(x)

# **RDK X3** sets BERNOULLI2, **RDK Ultra** sets BAYES.

set_march(March.BAYES)

device = torch.device("cpu")

data = torch.arange(1 * 3 * 4 * 4) / 100 + 1

data = data.reshape((1, 3, 4, 4))

data = data.to(torch.float32).to(device)

float_net = TestFuseNet(3).to(device)

float_net(data, data)

qat_net = prepare_qat_fx(float_net, {"": default_qat_8bit_fake_quant_qconfig})

qat_net = qat_net.to(device)

qat_net(data, data)

# Need to deepcopy the model before conversion in fx mode

qat_net2 = deepcopy(qat_net)

quantized_net = convert_fx(qat_net2)

model_profiler(qat_net, quantized_net, (data, data), mode="QvsQ")

If the out_dir parameter is not specified, a horizon_quant_debug folder will be generated in the current directory, and profiler.html and the results of various debug tools will be saved in that folder. For a detailed explanation of the output of each debug tool, please refer to the specific introduction of each tool below.

Fuse Check

The correctness of the model fuse involves two aspects:

- Whether the fusable operators have been fused.

- Whether the fused operators are correct.

This interface can only check the first case. For the second case, please use a similarity comparison tool to compare the feature similarity of the model before and after fusion. If you find that the feature similarity is problematic for all features after a certain operator, the fusion of this operator may be incorrect (the fusion process combines several ops into one, and uses Identity to replace other locations, so it is normal for feature similarity to be low at these Identity positions).

This interface only accepts input of floating-point models.

# from horizon_plugin_pytorch.utils.quant_profiler import check_unfused_operations

def check_unfused_operations(

model: torch.nn.Module,

example_inputs,

print_tabulate=True,

):

"""Check if there are unfused ops in the model.

This interface can only check if there are unfused ops. It cannot check the correctness of the fusion. If you want to check if the fusion of ops is correct,

please use the `featuremap_similarity` interface to compare the similarity between the pre-fusion and post-fusion models.

Parameters:

model: input model

example_inputs: model input parameters

print_tabulate: whether to print the result. The default is True.

Output:

List[List[str]]: a list of fusionable op patterns

Example Usage:

This is an example in eager mode (manually define fuse pattern and call fuse function). If using fx for quantization, all fusionable patterns in the model will be fused automatically.

import horizon_plugin_pytorch as horizon

import numpy as np

import torch

from horizon_plugin_pytorch import nn as horizon_nn

from horizon_plugin_pytorch.march import March, set_march

from horizon_plugin_pytorch.nn.quantized import FloatFunctional

from horizon_plugin_pytorch.utils.quant_profiler import check_unfused_operations

from torch import nn

from torch.quantization import DeQuantStub, QuantStub

class Conv2dModule(nn.Module):

def __init__(

self,

in_channels,

out_channels,

kernel_size=1,

stride=1,

padding=0,

dilation=1,

groups=1,

bias=True,

padding_mode="zeros",

):

super().__init__()

self.conv2d = nn.Conv2d(

in_channels,

out_channels,

kernel_size,

stride,

padding,

dilation,groups,

bias,

padding_mode,

)

self.add = FloatFunctional()

self.bn_mod = nn.BatchNorm2d(out_channels)

self.relu_mod = nn.ReLU()

def forward(self, x, y):

x = self.conv2d(x)

x = self.bn_mod(x)

x = self.add.add(x, y)

x = self.relu_mod(x)

return x

def fuse_model(self):

from horizon_plugin_pytorch.quantization import fuse_modules

fuse_list = ["conv2d", "bn_mod", "add", "relu_mod"]

fuse_modules(

self,

fuse_list,

inplace=True,

)

class TestFuseNet(nn.Module):

def __init__(self, channels) -> None:

super().__init__()

self.convmod1 = Conv2dModule(channels, channels)

self.convmod2 = Conv2dModule(channels, channels)

self.convmod3 = Conv2dModule(channels, channels)

self.shared_conv = nn.Conv2d(channels, channels, 1)

self.bn1 = nn.BatchNorm2d(channels)

self.bn2 = nn.BatchNorm2d(channels)

self.sub = FloatFunctional()

self.relu = nn.ReLU()

def forward(self, x, y):

x = self.convmod1(x, y)

x = self.convmod2(y, x)

x = self.convmod3(x, y)

x = self.shared_conv(x)

x = self.bn1(x)

y = self.shared_conv(y)

y = self.bn2(y)

x = self.sub.sub(x, y)

x = self.relu(x)

return x

def fuse_model(self):

self.convmod1.fuse_model()

self.convmod3.fuse_model()

shape = np.random.randint(10, 20, size=4).tolist()

data0 = torch.rand(size=shape)

data1 = torch.rand(size=shape)

float_net = TestFuseNet(shape[1])

float_net.fuse_model()

check_unfused_operations(float_net, (data0, data1))

The output result is as follows:

name type

------------------- ------------------------------------------------

shared_conv(shared) <class 'torch.nn.modules.conv.Conv2d'>

bn1 <class 'torch.nn.modules.batchnorm.BatchNorm2d'>

name type

------------------- ------------------------------------------------

shared_conv(shared) <class 'torch.nn.modules.conv.Conv2d'>

bn2 <class 'torch.nn.modules.batchnorm.BatchNorm2d'>

name type

----------------- --------------------------------------------------------------------------------

convmod2.conv2d <class 'torch.nn.modules.conv.Conv2d'>

convmod2.bn_mod <class 'torch.nn.modules.batchnorm.BatchNorm2d'>

convmod2.add <class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'>

convmod2.relu_mod <class 'torch.nn.modules.activation.ReLU'>

Each group of patterns that can be fused but are not fused will be outputted in a tabular format, with the first column indicating the name of the module defined in the model, and the second column indicating the type of the module.

Shared Operation Check

This interface calculates and prints the number of times each operation is called in one forward pass of the model, thereby checking for shared operations in the model. If a module instance appears multiple times in the model with different names, the function will use the first name and record all calls under that name (you may see related warnings).

# from horizon_plugin_pytorch.utils.quant_profiler import get_module_called_count

def get_module_called_count(

model: torch.nn.Module,

example_inputs,

check_leaf_module: callable = None,

print_tabulate: bool = True,

) -> Dict[str, int]:

"""Calculate the number of calls to leaf nodes in the model.

Parameters:

model: The model.

example_inputs: Input to the model.

check_leaf_module: Check whether the module is a leaf node. Default is None,

using the predefined is_leaf_module, treating all defined operations in

horizon_plugin_pytorch as well as unsupported floating point operations

as leaf nodes.

print_tabulate: Whether to print the results. Default is True.

Output:

Dict[str, int]: The name of each layer in the model and the corresponding

number of calls.

"""

Example usage:

import numpy as np

import torch

from torch import nn

from torch.quantization import DeQuantStub, QuantStub

import horizon_plugin_pytorch as horizon

from horizon_plugin_pytorch import nn as horizon_nn

from horizon_plugin_pytorch.march import March, set_march

from horizon_plugin_pytorch.nn.quantized import FloatFunctional

from horizon_plugin_pytorch.utils.quant_profiler import get_module_called_count

class Net(nn.Module):

def __init__(self, quant=False, share_op=True):

super(Net, self).__init__()

self.quant_stubx = QuantStub()

self.quant_stuby = QuantStub()

self.mul_op = FloatFunctional()

self.cat_op = FloatFunctional()

self.quantized_ops = nn.Sequential(

nn.ReLU(),

nn.Sigmoid(),

nn.Softmax(),

nn.SiLU(),

horizon_nn.Interpolate(

scale_factor=2, recompute_scale_factor=True

),

horizon_nn.Interpolate(

scale_factor=2.3, recompute_scale_factor=True

),

nn.AvgPool2d(kernel_size=4),

nn.Upsample(scale_factor=1.3, mode="bilinear"),

nn.UpsamplingBilinear2d(scale_factor=0.7),

)

self.dequant_stub = DeQuantStub()

self.float_ops = nn.Sequential(

nn.Tanh(),

nn.LeakyReLU(),

nn.PReLU(),

nn.UpsamplingNearest2d(scale_factor=0.7),

)

self.quant = quant

self.share_op = share_op

def forward(self, x, y):

x = self.quant_stubx(x)

y = self.quant_stuby(y)

z = self.mul_op.mul(x, y)

x = self.cat_op.cat((x, y), dim=1)

if self.share_op:

x = self.cat_op.cat((x, y), dim=1)

x = self.quantized_ops(x)

x = self.dequant_stub(x)

if not self.quant:

x = self.float_ops(x)

return x

shape = np.random.randint(10, 20, size=4).tolist()

data0 = torch.rand(size=shape)

data1 = torch.rand(size=shape)

float_net = Net()

get_module_called_count(float_net, (data0, data1))

The output is a table that records the number of times each module in the model is called. Normally, each module is called once; if it is called 0 times, it means that the module is defined but not used; if it is called more than once, it means that the module is shared and used multiple times:

name called times

--------------- --------------

quant_stubx 1

quant_stuby 1

unused 0

mul_op 1

cat_op 2

quantized_ops.0 1

quantized_ops.1 1

quantized_ops.2 1

quantized_ops.3 1

quantized_ops.4 1

quantized_ops.5 1

quantized_ops.6 1

quantized_ops.7 1

quantized_ops.8 1

dequant_stub 1

float_ops.0 1

float_ops.1 1

float_ops.2 1

float_ops.3 1

Quantization Configuration Check

Check the quantization configurations for each op of the calibration/QAT model. The input must be a QAT or calibration model. The output will be saved in the qconfig_info.txt file.

# from horizon_plugin_pytorch.utils.quant_profiler import check_qconfig

def check_qconfig(

model: torch.nn.Module,

example_inputs: Any,

prefixes: Tuple = (),

types: Tuple = (),

custom_check_func: Optional[Callable] = None,

out_dir: Optional[str] = None,

):

"""Check the quantization configurations for the calibration/QAT model.

This function will

1) Check the quantization configurations for the output activations and weights

of each layer in the model. The configuration information will be saved in the

`qconfig_info.txt` file.

2) Check the input and output types for each layer in the model.

By default, the function will print a warning message for the following cases:

1) Output layer activation is not quantized.

2) Fixed scale.

3) Weight is quantized to a non-int8 type (currently only int8 quantization is supported).

4) Input and output types of the model are different.

If you want to check for more information, you can pass a custom check function

through `custom_check_func`.

Parameters:

model: The input model, must be a QAT model.

example_inputs: Model inputs.

prefixes: Specify the layer names (starting with prefixes) of the ops to check

the quantization configurations.

types: Specify the types of the ops to check the quantization configurations.

custom_check_func: Custom function for checking additional information. This

function will be called within a module's hook, so it needs to be defined in the

following format:

func(module, input, output) -> None

out_dir: The path to save the result file `qconfig_info.txt`. If None, it will

be saved in the current path.

"""

Example usage:

import numpy as np

import torch

from horizon_plugin_pytorch import nn as horizon_nn

from horizon_plugin_pytorch.dtype import qint16

from horizon_plugin_pytorch.march import March, set_march

from horizon_plugin_pytorch.nn.quantized import FloatFunctional

from horizon_plugin_pytorch.quantization import get_default_qconfig

from horizon_plugin_pytorch.quantization.qconfig import (

default_qat_8bit_fake_quant_qconfig,

)

from horizon_plugin_pytorch.quantization.quantize_fx import (

convert_fx,

prepare_qat_fx,

)

from horizon_plugin_pytorch.quantization.observer import FixedScaleObserver

from horizon_plugin_pytorch.utils.quant_profiler import check_qconfig

from torch import nn

from torch.quantization import DeQuantStub, QuantStub

class Conv2dModule(nn.Module):

def __init__(

self,

in_channels,

out_channels,

kernel_size=1,

stride=1,

padding=0,

dilation=1,

groups=1,

bias=True,

padding_mode="zeros",

):

super().__init__()

self.conv2d = nn.Conv2d(

in_channels,

out_channels,

kernel_size,

stride,

padding,

dilation,

groups,

bias,

padding_mode,

)self.add = FloatFunctional()

self.bn_mod = nn.BatchNorm2d(out_channels)

self.relu_mod = nn.ReLU()

def forward(self, x, y):

x = self.conv2d(x)

x = self.bn_mod(x)

x = self.add.add(x, y)

x = self.relu_mod(x)

return x

class TestFuseNet(nn.Module):

def __init__(self, channels) -> None:

super().__init__()

self.convmod1 = Conv2dModule(channels, channels)

self.convmod2 = Conv2dModule(channels, channels)

self.convmod3 = Conv2dModule(channels, channels)

self.shared_conv = nn.Conv2d(channels, channels, 1)

self.bn1 = nn.BatchNorm2d(channels)

self.bn2 = nn.BatchNorm2d(channels)

self.sub = FloatFunctional()

self.relu = nn.ReLU()

def forward(self, x, y):

x = self.convmod1(x, y)

x = self.convmod2(y, x)

x = self.convmod3(x, y)

x = self.shared_conv(x)

x = self.bn1(x)

y = self.shared_conv(y)

y = self.bn2(y)

x = self.sub.sub(x, y)

x = self.relu(x)

return x

float_net = TestFuseNet(3)

# **RDK X3** set BERNOULLI2, **RDK Ultra** set BAYES

set_march(March.BAYES)

# Manually construct unsupported or special cases

sub_qconfig = get_default_qconfig(

# Fixed sub's output scale

activation_qkwargs={

"observer": FixedScaleObserver,

"scale": 1 / 2 ** 15,"dtype": qint16,

}

)

qat_net = prepare_qat_fx(

float_net,

{

"": get_default_qconfig(

weight_qkwargs={

"qscheme": torch.per_channel_symmetric,

"ch_axis": 0,

# Does not support int16 quantization for weight

"dtype": qint16,

}

),

"module_name": [("sub", sub_qconfig)]

}

)

shape = np.random.randint(10, 20, size=4).tolist()

shape[1] = 3

data0 = torch.rand(size=shape)

data1 = torch.rand(size=shape)

check_qconfig(qat_net, (data0, data1))

Output:

-

qconfig_info.txt

Each layer out qconfig:

+-------------------+----------------------------------------------------------------------------+--------------------+-------------+----------------+

| Module Name | Module Type | Input dtype | out dtype | ch_axis |

|-------------------+----------------------------------------------------------------------------+--------------------+-------------+----------------|

| quantx | <class 'horizon_plugin_pytorch.nn.qat.stubs.QuantStub'> | torch.float32 | qint8 | -1 |

| quanty | <class 'horizon_plugin_pytorch.nn.qat.stubs.QuantStub'> | torch.float32 | qint8 | -1 |

| convmod1.add | <class 'horizon_plugin_pytorch.nn.qat.conv2d.ConvAddReLU2d'> | ['qint8', 'qint8'] | qint8 | -1 |

| convmod2.conv2d | <class 'horizon_plugin_pytorch.nn.qat.conv2d.Conv2d'> | qint8 | qint8 | -1 |

| convmod2.bn_mod | <class 'horizon_plugin_pytorch.nn.qat.batchnorm.BatchNorm2d'> | qint8 | qint8 | -1 |

| convmod2.add[add] | <class 'horizon_plugin_pytorch.nn.qat.functional_modules.FloatFunctional'> | ['qint8', 'qint8'] | qint8 | -1 |

| convmod2.relu_mod | <class 'horizon_plugin_pytorch.nn.qat.relu.ReLU'> | qint8 | qint8 | qconfig = None |

| convmod3.add | <class 'horizon_plugin_pytorch.nn.qat.conv2d.ConvAddReLU2d'> | ['qint8', 'qint8'] | qint8 | -1 |

| shared_conv | <class 'horizon_plugin_pytorch.nn.qat.conv2d.Conv2d'> | qint8 | qint8 | -1 |

| bn1 | <class 'horizon_plugin_pytorch.nn.qat.batchnorm.BatchNorm2d'> | qint8 | qint8 | -1 |

| shared_conv(1) | <class 'horizon_plugin_pytorch.nn.qat.conv2d.Conv2d'> | qint8 | qint8 | -1 |

| bn2 | <class 'horizon_plugin_pytorch.nn.qat.batchnorm.BatchNorm2d'> | qint8 | qint8 | -1 |

| sub[sub] | <class 'horizon_plugin_pytorch.nn.qat.functional_modules.FloatFunctional'> | ['qint8', 'qint8'] | qint16 | -1 |

| relu | <class 'horizon_plugin_pytorch.nn.qat.relu.ReLU'> | qint16 | qint16 | qconfig = None |

+-------------------+----------------------------------------------------------------------------+--------------------+-------------+----------------+

Weight qconfig:

+-----------------+--------------------------------------------------------------+----------------+-----------+

| Module Name | Module Type | weight dtype | ch_axis |

|-----------------+--------------------------------------------------------------+----------------+-----------|

| convmod1.add | <class 'horizon_plugin_pytorch.nn.qat.conv2d.ConvAddReLU2d'> | qint16 | 0 |

| convmod2.conv2d | <class 'horizon_plugin_pytorch.nn.qat.conv2d.Conv2d'> | qint16 | 0 |

| convmod3.add | <class 'horizon_plugin_pytorch.nn.qat.conv2d.ConvAddReLU2d'> | qint16 | 0 |

| shared_conv | <class 'horizon_plugin_pytorch.nn.qat.conv2d.Conv2d'> | qint16 | 0 |

| shared_conv(1) | <class 'horizon_plugin_pytorch.nn.qat.conv2d.Conv2d'> | qint16 | 0 |

+-----------------+--------------------------------------------------------------+----------------+-----------+

Please check if these OPs qconfigs are expected..

+-----------------+----------------------------------------------------------------------------+------------------------------------------------------------------+

| Module Name | Module Type | Msg |

|-----------------+----------------------------------------------------------------------------+------------------------------------------------------------------|

| convmod1.add | <class 'horizon_plugin_pytorch.nn.qat.conv2d.ConvAddReLU2d'> | qint16 weight!!! |

| convmod2.conv2d | <class 'horizon_plugin_pytorch.nn.qat.conv2d.Conv2d'> | qint16 weight!!! |

| convmod3.add | <class 'horizon_plugin_pytorch.nn.qat.conv2d.ConvAddReLU2d'> | qint16 weight!!! |

| shared_conv | <class 'horizon_plugin_pytorch.nn.qat.conv2d.Conv2d'> | qint16 weight!!! |

| shared_conv(1) | <class 'horizon_plugin_pytorch.nn.qat.conv2d.Conv2d'> | qint16 weight!!! |

| sub[sub] | <class 'horizon_plugin_pytorch.nn.qat.functional_modules.FloatFunctional'> | input dtype ['qint8', 'qint8'] is not same with out dtype qint16 |