Principles and Steps of PTQ

Introduction

Model conversion refers to the process of converting the original floating-point model to the Heterogeneous Line model. The original floating-point model (also referred to as the floating-point model in some places in this article) refers to the model you trained using DL frameworks such as TensorFlow/PyTorch, with a computational precision of float32. The Heterogeneous Line model is a model format that is suitable for running on the Heterogeneous Line processor. This chapter will repeatedly use these two model terms. To avoid misunderstandings, please understand this concept before reading further.

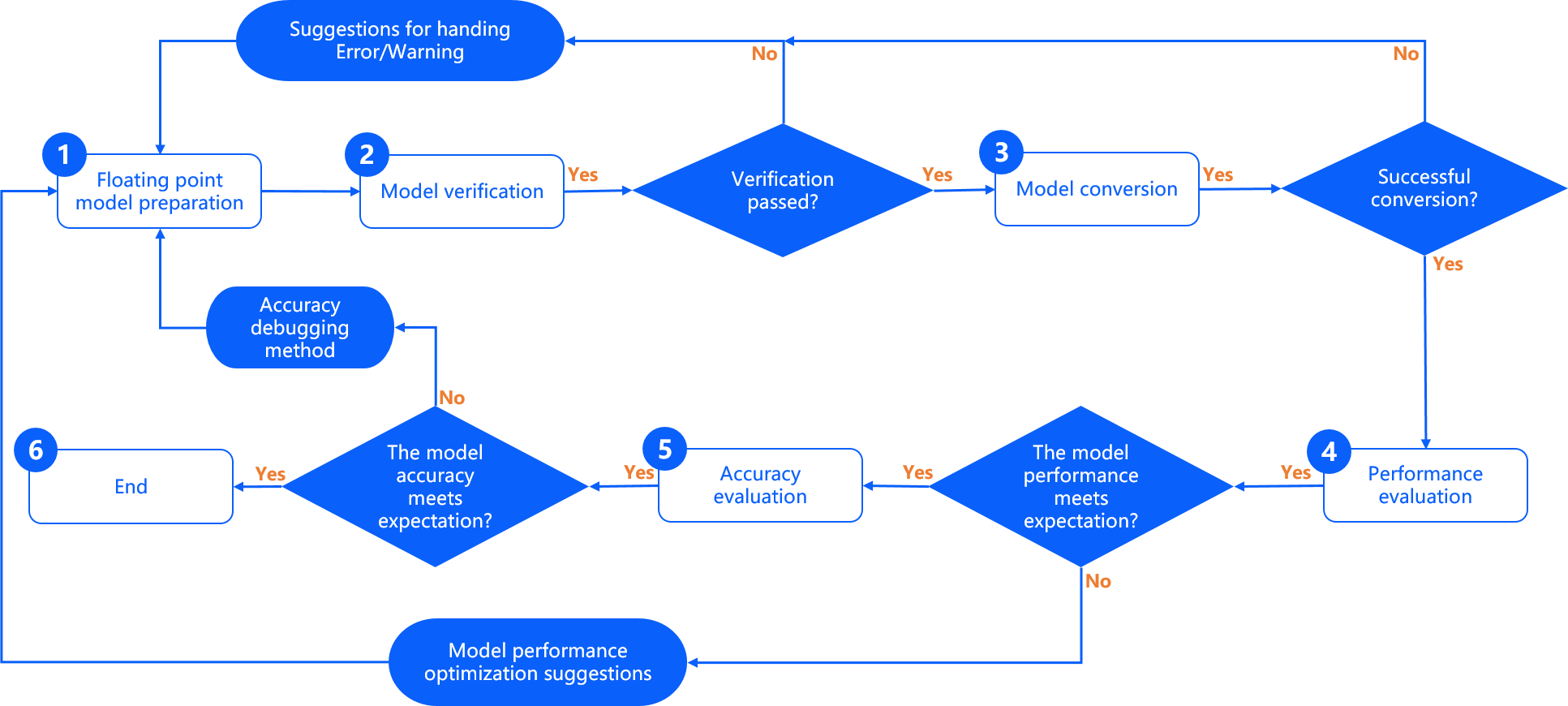

The complete development process of the model with the Heterogeneous Line algorithm toolchain requires five important stages: Floating-point Model Preparation, Model Verification, Model Conversion, Performance Evaluation, and Accuracy Evaluation, as shown in the following diagram:

Floating-point Model Preparation This stage is used to ensure that the format of the original floating-point model is supported by the Heterogeneous Line model conversion tool. The original floating-point model is obtained from the available model trained by DL frameworks such as TensorFlow/PyTorch. For specific floating-point model requirements and recommendations, please refer to the Floating-point Model Preparation section.

Model Verification This stage is used to verify whether the original floating-point model meets the requirements of the Heterogeneous Line algorithm toolchain. Heterogeneous Line provides the hb_mapper checker tool for checking floating-point models. For specific usage, please refer to the Model Verification section.

Model Conversion This stage is used to perform the conversion from the floating-point model to the Heterogeneous Line heterogeneous model. After this stage, you will obtain a model that can run on the Heterogeneous Line processor. Heterogeneous Line provides the hb_mapper makertbin conversion tool to complete key steps such as model optimization, quantization, and compilation. For specific usage, please refer to the Model Conversion section.

Performance Evaluation This stage is mainly used to evaluate the inference performance of the Heterogeneous Line heterogeneous model. Heterogeneous Line provides tools for model performance evaluation, which you can use to verify whether the model performance meets the application requirements. For specific usage instructions, please refer to the Model Performance Analysis and Tuning section.

Accuracy Evaluation This stage is mainly used to evaluate the inference accuracy of the Heterogeneous Line heterogeneous model. Heterogeneous Line provides tools for model accuracy evaluation. For specific usage instructions, please refer to the Model Accuracy Analysis and Tuning section.

Model Preparation

The floating-point model obtained from publicly available DL frameworks is the input for the Heterogeneous Line model conversion tool. Currently, the conversion tool supports the following DL frameworks:

| Framework | Caffe | PyTorch | TensorFlow | MXNet | PaddlePaddle |

|---|---|---|---|---|---|

| Heterogeneous Line Toolchain | Supported | Supported (via ONNX conversion) | Supported (via ONNX conversion) | Supported (via ONNX conversion) | Supported (via ONNX conversion) |

Among these frameworks, the caffemodel exported by the Caffe framework is directly supported. PyTorch, TensorFlow, MXNet, and other DL frameworks are indirectly supported through conversion to the ONNX format.

For the conversion from different frameworks to ONNX, there are currently corresponding standard solutions, as follows:

-

Pytorch2Onnx: PyTorch official API supports exporting models directly as ONNX models. Refer to the link: https://pytorch.org/tutorials/advanced/super_resolution_with_onnxruntime.html

-

Tensorflow2Onnx: Conversion based on the onnx/tensorflow-onnx in the ONNX community. Refer to the link: https://github.com/onnx/tensorflow-onnx

-

MXNet2Onnx: MXNet official API supports exporting models directly as ONNX models. Refer to the link:https://github.com/dotnet/machinelearning/blob/main/test/Microsoft.ML.Tests/OnnxConversionTest.cs

-

More ONNX conversion support for other frameworks, please refer to the link: https://github.com/onnx/tutorials#converting-to-onnx-format

We also provide tutorials on how to export ONNX and visualize models for Pytorch, PaddlePaddle, and TensorFlow2 frameworks. Please refer to:

-

Operators used in floating-point models need to comply with the operator constraint conditions of the D-Robotics algorithm toolchain. Please refer to the Supported Operator List section for details.

-

Currently, the conversion tool only supports the conversion of models with output count less than or equal to 32.

-

Supports quantization of

caffe 1.0version floating-point models andir_version ≤ 7,opset=10,opset=11versions of ONNX floating-point models into fixed-point models supported by Horizon. For the mapping between the IR version of the ONNX model and the ONNX version, please refer to the ONNX official documentation. -

Model input dimensions only support

fixed 4 dimensionsinput NCHW or NHWC (N dimension can only be 1), for example: 1x3x224x224 or 1x224x224x3. Dynamic dimensions and non-4D inputs are not supported. -

Do not include

post-processing operatorsin floating-point models, such as NMS operators.

Model Validation

Before formally converting the model, please use the hb_mapper checker tool to validate the model and ensure that it complies with the constraints supported by the D-Robotics processor.

It is recommended to refer to the script methods 01_check_X3.sh or 01_check_Ultra.sh in the model conversion horizon_model_convert_sample example package of D-Robotics for examples of caffe, onnx, and other models.

Validate the model using the hb_mapper checker tool

The usage of the hb_mapper checker tool is as follows:

hb_mapper checker --model-type $`{`model_type`}` \

--march $`{`march`}` \

--proto $`{`proto`}` \

--model $`{`caffe_model/onnx_model`}` \

--input-shape $`{`input_node`}` $`{`input_shape`}` \

--output $`{`output`}`

hb_mapper checker parameters explanation:

--model-type

Specifies the model type of the input for checking, currently only supports setting caffe or onnx.

--march

Specifies the D-Robotics processor type to be adapted, can be set to bernoulli2 or bayes; set to bernoulli2 for RDK X3 and bayes for RDK Ultra.

--proto

This parameter is only useful when model-type is set to caffe, and its value is the prototxt file name of the Caffe model.

--model

When model-type is specified as caffe, its value is the caffemodel file name of the Caffe model.

When model-type is specified as onnx, its value is the name of the ONNX model file.

--input-shape

Optional parameter, explicitly specifies the input shape of the model.

Its value is {`input_name`}` `{`NxHxWxC/NxCxHxW`}, with a space between input_name and the shape.

For example, if the model input is named data1 and the input shape is [1,224,224,3],

then the configuration should be --input-shape data1 1x224x224x3.

If the configured shape here is inconsistent with the shape information inside the model, the shape configured here takes precedence.

Note that --input-shape only accepts one name-shape combination. If your model has multiple input nodes,

you can configure the --input-shape parameter multiple times in the command.

The --output parameter has been deprecated. The log information is stored in hb_mapper_checker.log by default.

Handling Exceptions in Checking

If the model checking step terminates abnormally or error messages are displayed, it means that the model verification fails. Please refer to the error information printed on the terminal or the hb_mapper_checker.log log file generated in the current path for error information and modification suggestions.

For example: The following configuration contains an unrecognized operator type Accuracy:

layer `{`

name: "data"

type: "Input"

top: "data"

input_param `{` shape: `{` dim: 1 dim: 3 dim: 224 dim: 224 `}` `}`

`}`

layer `{`

name: "Convolution1"

type: "Convolution"

bottom: "data"

top: "Convolution1"

convolution_param `{`

num_output: 128

bias_term: false

pad: 0

kernel_size: 1

group: 1

stride: 1

weight_filler `{`

type: "msra"

`}`

`}`

`}`

layer `{`

name: "accuracy"

type: "Accuracy"

bottom: "Convolution3"

top: "accuracy"

include `{`

phase: TEST

`}`

`}`

After using hb_mapper checker to check this model, you will get the following information in the hb_mapper_checker.log:

ValueError: Not support layer name=accuracy type=Accuracy

- If the model check step is terminated abnormally or there is an error message, it means that the model verification fails. Please confirm the error message and modification suggestions according to the terminal print or the generated

hb_mapper_checker.loglog file in the current path. You can find the solution to the error in the "Model Quantization Errors and Solutions" section. If the above steps still cannot resolve the problem, please contact the D-Robotics technical support team or submit your question in the D-Robotics Official Developer Community. We will provide support within 24 hours.

Interpretation of the check results

If there is no ERROR, then the check is successful. The hb_mapper checker tool will directly output the following information:

==============================================

Node ON Subgraph Type

----------

conv1 BPU id(0) HzSQuantizedConv

conv2_1/dw BPU id(0) HzSQuantizedConv

conv2_1/sep BPU id(0) HzSQuantizedConv

conv2_2/dw BPU id(0) HzSQuantizedConv

conv2_2/sep BPU id(0) HzSQuantizedConvconv3_1/dw BPU id(0) HzSQuantizedConv

conv3_1/sep BPU id(0) HzSQuantizedConv

...

The result of each line represents the checking status of a model node, with four columns: Node, ON, Subgraph, and Type. They represent the node name, the hardware on which the node is executed, the subgraph to which the node belongs, and the D-Robotics operator name to which the node is mapped. If the model contains CPU operators in the network structure, the hb_mapper checker tool will split the part before and after the CPU operator into two subgraphs.

Optimization Guide for Checking Results

Ideally, all operators in the model's network structure should run on the BPU, which means there is only one subgraph. If there are CPU operators causing multiple subgraphs to be split, the "hb_mapper checker" tool will provide the specific reasons for the appearance of the CPU operators. Below are examples of model verification on RDK X3 and RDK Ultra.

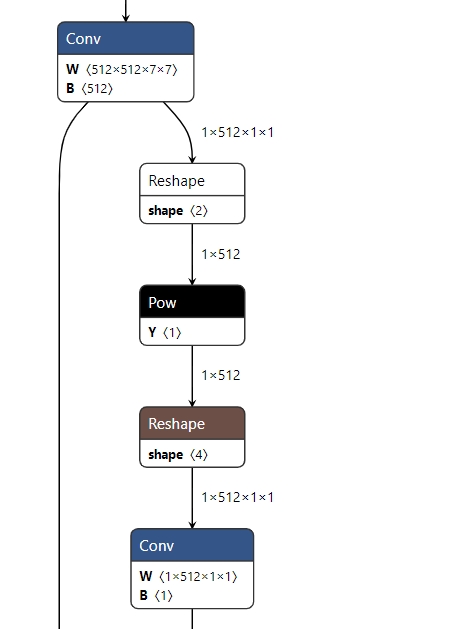

- The Caffe model running on "RDK X3" has a structure of Reshape + Pow + Reshape. According to the operator constraint list on "RDK X3", we can see that the Reshape operator is currently running on the CPU, and the shape of Pow is also non-4D, which does not meet the constraints of the X3 BPU operator.

Therefore, the final checking result of the model will also show segmentation, as follows:

2022-05-25 15:16:14,667 INFO The converted model node information:

====================================================================================

Node ON Subgraph Type

-------------

conv68 BPU id(0) HzSQuantizedConv

sigmoid16 BPU id(0) HzLut

axpy_prod16 BPU id(0) HzSQuantizedMul

UNIT_CONV_FOR_eltwise_layer16_add_1 BPU id(0) HzSQuantizedConv

prelu49 BPU id(0) HzPRelu

fc1 BPU id(0) HzSQuantizedConv

fc1_reshape_0 CPU -- Reshape

fc_output/square CPU -- Pow

fc_output/sum_pre_reshape CPU -- Reshape

fc_output/sum BPU id(1) HzSQuantizedConv

fc_output/sum_reshape_0 CPU -- Reshape

fc_output/sqrt CPU -- Pow

fc_output/expand_pre_reshape CPU -- Reshape

fc_output/expand BPU id(2) HzSQuantizedConv

fc1_reshape_1 CPU -- Reshape

fc_output/expand_reshape_0 CPU -- Reshape

fc_output/op CPU -- Mul

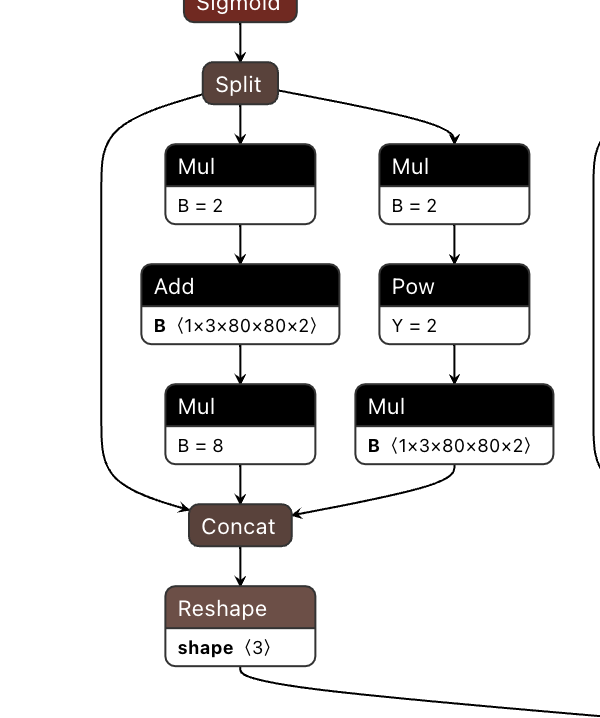

- The ONNX model running on "RDK Ultra" has a structure of Mul + Add + Mul. According to the operator constraint list on "RDK Ultra", we can see that Mul and Add operators are supported on five dimensions for BPU execution, but they need to meet the constraints of the Ultra BPU operators; otherwise, they will fall back to CPU computation.

Therefore, the final checking result of the model will also show segmentation, as follows:

====================================================================================

Node ON Subgraph Type

-------------------------------------------------------------------------------------

Reshape_199 BPU id(0) Reshape

Transpose_200 BPU id(0) Transpose

Sigmoid_201 BPU id(0) HzLut

Split_202 BPU id(0) Split

Mul_204 CPU -- Mul

Add_206 CPU -- Add

Mul_208 CPU -- Mul

Mul_210 CPU -- Mul

Pow_211 BPU id(1) HzLut

Mul_213 CPU -- Mul

Concat_214 CPU -- Concat

Reshape_215 CPU -- Reshape

Conv_216 BPU id(0) HzSQuantizedConv

Reshape_217 BPU id(0) Reshape

Transpose_218 BPU id(0) Transpose

Sigmoid_219 BPU id(0) HzLut

Split_220 BPU id(0) Split

Mul_222 CPU -- Mul

Add_224 CPU -- Add

Mul_226 CPU -- Mul

Mul_228 CPU -- Mul

Pow_229 BPU id(2) HzLut

Mul_231 CPU -- Mul

Concat_232 CPU -- Concat

Reshape_233 CPU -- Reshape

Conv_234 BPU id(0) HzSQuantizedConv

Reshape_235 BPU id(0) Reshape

Transpose_236 BPU id(0) Transpose

Sigmoid_237 BPU id(0) HzLut

Split_238 BPU id(0) Split

Mul_240 CPU -- Mul

Add_242 CPU -- Add

Mul_244 CPU -- Mul

Mul_246 CPU -- Mul

Pow_247 BPU id(3) HzLut

Mul_249 CPU -- Mul

Concat_250 CPU -- Concat

Reshape_251 CPU -- Reshape

According to the hints provided by hb_mapper checker, generally, the operators running on BPU will have better performance. In this case, you can remove the CPU operators like pow and reshape from the model and calculate the corresponding functions in the post-processing to reduce the number of subgraphs.

However, multiple subgraphs will not affect the overall conversion process but will affect the performance of the model to a large extent. It is recommended to adjust the model operators to run on BPU as much as possible. You can refer to the BPU operator support list in the D-Robotics Processor Operator Support List to replace the CPU operators with BPU operators with the same functions or move the CPU operators in the model to the pre- and post-processing of the model for CPU calculations.

Model conversion

The model conversion phase will convert the floating-point model to a D-Robotics heterogeneous model, and after this phase, you will have a model that can run on the D-Robotics processor.

Before performing the conversion, please make sure that the validation model process has been successfully passed.

The model conversion is completed using the hb_mapper makertbin tool, during which important processes such as model optimization and calibration quantization will be performed. Calibration requires preparing calibration data according to the model preprocessing requirements.

In order to facilitate your comprehensive understanding of model conversion, this section will introduce calibration data preparation, conversion tool usage, conversion internal process interpretation, conversion result interpretation, and conversion output interpretation in order.

Preparing Calibration Data

During the model conversion, the calibration stage will require about 100 calibration input samples, and each sample is an independent data file. To ensure the accuracy of the converted model, we hope that these calibration samples come from the training set or validation set you used to train the model, and not use very rare abnormal samples, such as solid color images, images without any detection or classification objects, etc.

In the conversion configuration file, the "preprocess_on" parameter corresponds to two different preprocessing sample requirements under the enabled and disabled states, respectively. (For detailed configuration of parameters, please refer to the relevant instructions in the calibration parameter group below)

When "preprocess_on" is disabled, you need to perform the same preprocessing of the samples taken from the training set/validation set as the model inference before the calibration. The calibrated samples after processing will have the same data type ("input_type_train"), size ("input_shape"), and layout ("input_layout_train") as the original model.

For models with featuremap inputs, you can use the "numpy.tofile" command to save the data as a float32 format binary file. The tool chain will read it based on the "numpy.fromfile" command during calibration.

For example, for the original floating-point model trained using ImageNet for classification, which has only one input node, the input information is described as follows:

- Input type: BGR

- Input layout: NCHW

- Input size: 1x3x224x224

The data preprocessing for model inference using the validation set is as follows:

- Scale the image to maintain the aspect ratio, with the shorter side scaled to 256.

- Use the "center_crop" method to obtain a 224x224 size image.

- Subtract the mean by channel.

- Multiply the data by a scale factor.

The sample processing code for the example model mentioned above is as follows:

To avoid excessive code length, the implementation code of various simple transformers is not included. For specific usage, please refer to the chapter "Transformer Usage" in the Toolchain section.

It is recommended to refer to the preprocessing steps of the sample models in the D-Robotics model conversion "horizon_model_convert_sample" sample package, such as caffe and onnx models: "02_preprocess.sh" and "preprocess.py".

# This example uses skimage, there may be differences if using opencv

# It is worth noting that the transformers do not reflect the mean subtraction and scale multiplication operations

# The mean and scale operations have been integrated into the model, please refer to the norm_type/mean_value/scale_value configuration below

def data_transformer():

transformers = [

# Scale the long side and short side to maintain the aspect ratio, with the shorter side scaled to 256

ShortSideResizeTransformer(short_size=256),

# Use CenterCrop to obtain a 224x224 image

CenterCropTransformer(crop_size=224),

# Convert NHWC layout from skimage to NCHW layout required by the model

HWC2CHWTransformer(),

# Convert channel order from RGB to BGR required by the model

RGB2BGRTransformer(),

# Adjust the value range from [0.0, 1.0] to the value range required by the model

ScaleTransformer(scale_value=255)

]

return transformers

# src_image represents the original image in the calibration set

# dst_file represents the file name for storing the final calibration sample data

def convert_image(src_image, dst_file, transformers):

image = skimage.img_as_float(skimage.io.imread(src_file))

for trans in transformers:

image = trans(image)

# The input_type_train specified by the model is UINT8 for BGR value type

image = image.astype(np.uint8)

# Store the calibration sample data in binary format to the data file

image.tofile(dst_file)

if __name__ == '__main__':

# The following represents the original calibration image set (pseudo code)

src_images = ['ILSVRC2012_val_00000001.JPEG', ...]

# The following represents the final calibration file name (no restrictions on the file extension) (pseudo code)

# calibration_data_bgr_f32 is cal_data_dir specified in your configuration file

dst_files = ['./calibration_data_bgr_f32/ILSVRC2012_val_00000001.bgr', ...]

transformers = data_transformer()

for src_image, dst_file in zip(src_images, dst_files):

convert_image(src_image, dst_file, transformers)

When preprocess_on is enabled, the calibration samples can be in any image format supported by skimage. The conversion tool will resize these images to the size required by the input node of the model, and use the result as the input for calibration. This operation is simple but does not guarantee the accuracy of quantization, so we strongly recommend that you use the method of disabling preprocess_on.

Please note that the input_shape parameter in the YAML file specifies the input data size of the original float model. If it is a dynamic input model, you can set the input size after conversion using this parameter, and the size of the calibration data should be the same as input_shape.

For example: If the shape of the input node of the original float model is ?x3x224x224 (where "?" indicates a placeholder, i.e., the first dimension of the model is a dynamic input), and the input_shape in the conversion configuration file is set to 8x3x224x224, then the size of each calibration data that the user needs to prepare is 8x3x224x224. (Note that models with input shapes where the first dimension is not 1 do not support modifying the model batch information through the input_batch parameter.)

Convert the Model Using the hb_mapper makertbin Tool

hb_mapper makertbin provides two modes, with and without the fast-perf mode enabled.When the "fast-perf" mode is enabled, it will generate a bin model that can run at the highest performance on the board during the conversion process. The tool mainly performs the following operations:

-

Run BPU executable operators on the BPU as much as possible (if using "RDK Ultra", you can specify the operators running on the BPU through the node_info parameter in the yaml file. "RDK X3" is automatically optimized and cannot specify operators through the yaml configuration file).

-

Delete CPU operators at the beginning and end of the model that cannot be deleted, including: Quantize/Dequantize, Transpose, Cast, Reshape, etc.

-

Compile the model with the highest performance optimization level O3.

It is recommended to refer to the script methods of the example models in the D-Robotics Model Conversion "horizon_model_convert_sample" package, such as caffe and onnx example models: "03_build_X3.sh" or "03_build_Ultra.sh".

The usage of the hb_mapper makertbin command is as follows:

Without enabling the "fast-perf" mode:

hb_mapper makertbin --config ${config_file} \

--model-type ${model_type}

With the "fast-perf" mode enabled:

hb_mapper makertbin --fast-perf --model ${caffe_model/onnx_model`}` --model-type $`{`model_type`}` \

--proto ${caffe_proto} \

--march ${march}

Explanation of hb_mapper makertbin parameters:

--help

Display help information and exit.

-c, --config

The configuration file for model compilation, in yaml format. The file name uses the .yaml suffix. The complete configuration file template is as follows.

--model-type

Used to specify the model type for conversion input, currently supports setting "caffe" or "onnx".

--fast-perf

Enable the fast-perf mode. After enabling this mode, it will generate a bin model that can run at the highest performance on the board during the conversion process, making it convenient for you to evaluate the model's performance.

If you enable the fast-perf mode, you also need to configure the following:

--model

Caffe or ONNX floating-point model file.--proto

Used to specify the prototxt file of the Caffe model.

--march Microarchitecture of BPU. Set it to "bernoulli2" if using "RDK X3", and set it to "bayes" if using "RDK Ultra".

-

For "RDK X3 yaml configuration file", fill in the template file RDK X3 Caffe model quantization yaml template or RDK X3 ONNX model quantization yaml template directly.

-

For "RDK Ultra yaml configuration file", fill in the template file RDK Ultra Caffe model quantization yaml template or RDK Ultra ONNX model quantization yaml template directly.

-

If the hb_mapper makertbin step terminates abnormally or shows an error message, it means that the model conversion has failed. Please check the error message and modification suggestions in the terminal printout or in the

hb_mapper_makertbin.loglog file generated in the current path. You can find the solution for the error in the Model Quantization Errors and Solutions section. If the problem cannot be solved after these steps, please contact the D-Robotics technical support team or submit your question in the D-Robotics Official Technical Community. We will provide support within 24 hours.

Explanation of Parameters in Model Conversion YAML Configuration

Either a Caffe model or an ONNX model can be used, that is, either caffe_model + prototxt or onnx_model can be chosen.

In other words, either a Caffe model or an ONNX model can be used.

# Model parameter group

model_parameters:

# Original Caffe floating-point model description file

prototxt: '***.prototxt'

# Original Caffe floating-point model data model file

caffe_model: '****.caffemodel'

# Original ONNX floating-point model file

onnx_model: '****.onnx'

# Target processor architecture for conversion, keep the default value, bernoulli2 for D-Robotics RDK X3 and bayes for RDK Ultra

march: 'bernoulli2'

# Prefix of the model file for execution on the board after conversion

output_model_file_prefix: 'mobilenetv1'

# Directory for storing the output of the model conversion

working_dir: './model_output_dir'

# Specify whether the converted hybrid heterogeneous model retains the ability to output intermediate results of each layer, keep the default value

layer_out_dump: False

# Specify the output nodes of the model

output_nodes: `{`OP_name`}`

# Batch deletion of nodes of a certain typeremove_node_type: Dequantize

# Remove specified node by name

remove_node_name: `{`OP_name`}`

# Input parameters group

input_parameters:

# Name of the input node in the original floating-point model

input_name: "data"

# Data format of the input to the original floating-point model (same number and order as input_name)

input_type_train: 'bgr'

# Data layout of the input to the original floating-point model (same number and order as input_name)

input_layout_train: 'NCHW'

# Shape of the input to the original floating-point model

input_shape: '1x3x224x224'

# Batch size given to the network during execution, default value is 1

input_batch: 1

# Pre-processing method applied to the input data in the model

norm_type: 'data_mean_and_scale'

# Mean value subtracted from the image in the pre-processing method, if channel means, values must be separated by spaces

mean_value: '103.94 116.78 123.68'

# Scale value applied to the image in the pre-processing method, if channel scales, values must be separated by spaces

scale_value: '0.017'

# Data format that the transformed hybrid heterogeneous model needs to adapt to (same number and order as input_name)

input_type_rt: 'yuv444'

# Special format of the input data

input_space_and_range: 'regular'

# Data layout that the transformed hybrid heterogeneous model needs to adapt to (same number and order as input_name), not required if input_type_rt is nv12

input_layout_rt: 'NHWC'

# Calibration parameters group

calibration_parameters:

# Directory where the calibration samples for model calibration are stored

cal_data_dir: './calibration_data'

# Data storage type of the binary files for calibration data

cal_data_type: 'float32'

# Enable automatic processing of calibration image samples (using skimage read and resize to input node size)

#preprocess_on: False# Algorithm type for calibration, with default calibration algorithm as the first priority

calibration_type: 'default'

# Parameters for max calibration mode

# max_percentile: 1.0

# Force the OP to run on CPU, generally not needed, can be enabled during model accuracy tuning phase for precision optimization

# run_on_cpu: {OP_name}

# Force the OP to run on BPU, generally not needed, can be enabled during model performance tuning phase for performance optimization

# run_on_bpu: {OP_name}

# Specify whether to calibrate for each channel

# per_channel: False

# Specify the data precision for output nodes

# optimization: set_model_output_int8

# Compiler parameter group

compiler_parameters:

# Compilation strategy selection

compile_mode: 'latency'

# Whether to enable debug information for compilation, keep the default False

debug: False

# Number of cores for model execution

core_num: 1

# Optimization level for model compilation, keep the default O3

optimize_level: 'O3'

# Specify the input data source as 'pyramid' for the input named 'data'

#input_source: {"data": "pyramid"}

# Specify the maximum continuous execution time for each function call in the model

#max_time_per_fc: 1000

# Specify the number of processes during model compilation

#jobs: 8

# This parameter group does not need to be configured, only enabled when there are custom CPU operators

#custom_op:

# Calibration method for custom OP, recommend using registration method

#custom_op_method: register

# Implementation files for custom OP, multiple files can be separated with ";", this file can be generated from a template, see the custom OP documentation for details

#op_register_files: sample_custom.py# The folder where the custom OP implementation file is located, please use a relative path

#custom_op_dir: ./custom_op

The configuration file mainly includes four parameter groups: model parameter group, input information parameter group, calibration parameter group, and compilation parameter group.

In your configuration file, all four parameter groups need to exist, and specific parameters can be optional or mandatory. Optional parameters can be omitted.

The specific format for setting parameters is: param_name: 'param_value' ;

If there are multiple values for a parameter, separate each value with the ';' symbol: param_name: 'param_value1; param_value2; param_value3' ;

For specific configuration methods, please refer to: run_on_cpu: 'conv_0; conv_1; conv12' .

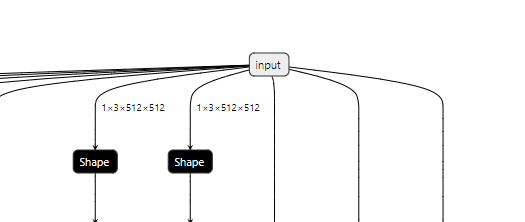

- When the model is a multi-input model, it is recommended to explicitly specify optional parameters such as

input_nameandinput_shapeto avoid errors in parameter correspondence order. - When configuring the

marchas bayes, which means performing RDK Ultra model conversion, if you configure the optimization leveloptimize_levelas O3, hb_mapper makerbin will automatically provide caching capabilities. That is, when you use hb_mapper makerbin to compile the model for the first time, it will automatically create a cache file. In subsequent compilations with the same working directory, this file will be automatically called, reducing your compilation time.

- Please note that if you set

input_type_rttonv12oryuv444, the input size of the model cannot have odd numbers. - Please note that currently RDK X3 does not support the combination of

input_type_rtasyuv444andinput_layout_rtasNCHW. - After the model conversion is successful, if an OP that meets the constraints of BPU operators still runs on the CPU, the main reason is that the OP belongs to the passive quantization OP. For information about passive quantization, please read the section Active and Passive Quantization Logic in the Algorithm Toolchain.

The following is a description of the specific parameter information. There will be many parameters, and we will introduce them in the order of the parameter groups mentioned above.

-

Model Parameter Group

| Parameter Name | Description | Value Range | Optional/Required |

|---|---|---|---|

prototxt | Purpose: Specifies the filename of the Caffe float model prototxt file. Description: Mandatory for hb_mapper makertbin with model-type set to caffe. | N/A | Optional |

caffe_model | Purpose: Specifies the filename of the Caffe float model caffemodel file. Description: Mandatory for hb_mapper makertbin with model-type set to caffe. | N/A | Optional |

onnx_model | Purpose: Specifies the filename of the ONNX float model onnx file. Description: Mandatory for hb_mapper makertbin with model-type set to onnx. | N/A | Optional |

march | Purpose: Specifies the platform architecture supported by the mixed-heterogeneous model to be produced. Description: Two available options correspond to the BPU micro-framework for RDK X3 and RDK Ultra. Choose based on your platform. | bernoulli2 or bayes | Required |

output_model_file_prefix | Purpose: Specifies the prefix for the converted mixed-heterogeneous model's output file name. Description: Prefix for the output integer model file name. | N/A | Required |

working_dir | Purpose: Specifies the directory where the model conversion output will be stored. Description: If the directory does not exist, the tool will automatically create it. | N/A | Optional (default: model_output) |

layer_out_dump | Purpose: Enables the ability to retain intermediate layer values in the mixed-heterogeneous model. Description: Intermediate layer values are used for debugging purposes. Disable this in normal scenarios. | True or False | Optional (default: False) |

output_nodes | Purpose: Specifies the model's output nodes. Description: Generally, the conversion tool automatically identifies the model's output nodes. This parameter is used to support specifying some intermediate layers as outputs. Provide specific node names, following the same format as the param_value description. Note that setting this parameter prevents the tool from automatically detecting outputs; the nodes you specify become the entire output. | N/A | Optional |

remove_node_type | Purpose: Sets the type of nodes to remove. Description: Hidden parameter, not setting or leaving blank won't affect the model conversion process. This parameter is used to support specifying node types to delete. Removed nodes must appear at the beginning or end of the model, connected to inputs or outputs. Caution: Nodes will be deleted in order, dynamically updating the model structure. The tool checks if a node is at an input or output before deletion. Order matters. | "Quantize", "Transpose", "Dequantize", "Cast", "Reshape". Separate by ";". | Optional |

remove_node_name | Purpose: Sets the name of nodes to remove. Description: Hidden parameter, not setting or leaving blank won't affect the model conversion process. This parameter is used to support specifying node names to delete. Removed nodes must appear at the beginning or end of the model, connected to inputs or outputs. Caution: Nodes will be deleted in order, dynamically updating the model structure. The tool checks if a node is at an input or output before deletion. Order matters. | N/A | Optional |

set_node_data_type | Purpose: Configures the output data type of a specific op as int16, only supported for RDK Ultra configuration! Description: In the model conversion process, most ops default to int8 for input and output data types. This parameter allows you to specify the output data type of a specific op as int16 under certain constraints. See the int16 configuration details for more information. Note: This functionality has been merged into the node_info parameter, which will be deprecated in future versions. | Supported operators listed in the model operator support list for RDK Ultra. | Optional |

debug_mode | Purpose: Saves calibration data for precision debugging analysis. Description: This parameter saves calibration data for precision debugging analysis in .npy format. This data can be directly loaded into the model for inference. If not set, you can save the data yourself and use the precision debugging tool for analysis. | "dump_calibration_data" | Optional |

node_info | Purpose: Supports configuring the input and output data types of specific ops as int16, and forces certain ops to run on CPU or BPU. Only supported for RDK Ultra configuration! Description: To reduce YAML parameters, we've combined the capabilities of set_node_data_type, run_on_cpu, and run_on_bpu into this parameter and expanded it to support configuring the input data type of specific ops as int16.Usage of node_info:- Run an op on BPU/CPU (example with BPU): node_info: {"node_name": {"ON": "BPU", }}- Run an op on BPU and configure its input and output data types: node_info: {"node_name": {"ON": "BPU", "InputType": "int16", "OutputType": "int16" }}- Run on multiple operators: node_info: {"/model.0/conv/Conv": {"ON": "BPU","InputType": "int16","OutputType": "int16"},"/model.0/act/Mul": {"ON": "BPU","InputType": "int16","OutputType": "int16"},"/model.2/Concat": {"ON": "BPU","InputType": "int16","OutputType": "int16"}}* InputType: 'int16' applies to all inputs. For specifying a particular input's data type, append a number, e.g., 'InputType0': 'int16' for the first input, 'InputType1': 'int16' for the second input.* OutputType doesn't support specifying a particular output, applying to all outputs. It doesn't support individual types like OutputType0 or OutputType1.Value Range: Refer to the model operator support list for RDK Ultra for supported int16 ops and those that can run on CPU or BPU. Default Configuration: None | Optional |

-

Input Parameters Group

| Parameter Name | Description | Value Range | Optional/Required |

|---|---|---|---|

input_name | Purpose: Specifies the input node name of the original floating-point model. Description: Not required when the floating-point model has only one input node. Must be configured for models with multiple input nodes to ensure accurate order of type and calibration data inputs. Multiple values can be set as described for param_value. | N/A | Optional |

input_type_train | Purpose: Specifies the input data type of the original floating-point model. Description: Each input node must have a configured data type. For models with multiple input nodes, the order should match that in input_name. Multiple values can be set as described for param_value. Data types available: refer to the explanation in the "Conversion Internal Process" section. | Values: rgb, bgr, yuv444, gray, featuremap | Required |

input_layout_train | Purpose: Specifies the input data layout of the original floating-point model. Description: Each input node requires a specific layout, which must match the model's original layout. Order should align with input_name. Multiple values can be set as described for param_value. Learn more about layouts in the "Conversion Internal Process" section. | Values: NHWC, NCHW | Required |

input_type_rt | Purpose: The input format needed for the converted heterogeneous model. Description: Specifies the desired input format, not necessarily matching the original model's format, but important for the platform to feed data into the model. One type per input node, with order matching input_name. Multiple values can be set as described for param_value. Data types available: refer to the explanation in the "Conversion Internal Process" section. | Values: rgb, bgr, yuv444, nv12, gray, featuremap | Required |

input_layout_rt | Purpose: The input data layout for the converted heterogeneous model. Description: Specifies the desired input layout for each node, which can differ from the original model. For NV12 input_type_rt, this parameter is unnecessary. Order should match input_name. Multiple values can be set as described for param_value. Learn more about layouts in the "Conversion Internal Process" section. | Values: NCHW, NHWC | Optional (if input_type_rt is NV12) |

input_space_and_range | Purpose: Specifies the special format of input data, particularly for ISP outputs in yuv420 format. Description: Used for adapting to different ISP formats, valid only if input_type_rt is set to nv12. Choices: regular for common yuv420, bt601_video for another video standard. Keep as regular unless specifically needed. | Values: regular, bt601_video | Optional |

input_shape | Purpose: Specifies the dimensions of the input data for the original floating-point model. Description: Dimensions should be separated by x, e.g., 1x3x224x224. Can be omitted for single-input models with the tool automatically reading size information from the model file. Order should match input_name. Multiple values can be set as described for param_value. | N/A | Optional |

input_batch | Purpose: The number of batches for the converted heterogeneous model to adapt to. Description: The batch size for the converted heterogeneous bin model, not affecting the ONNX model's batch size. Defaults to 1 if not specified. Only applicable for single-input models where the first dimension of input_shape is 1. | Range: 1-128 | Optional |

norm_type | Purpose: The preprocessing method added to the model's input data. Description: no_preprocess means no preprocessing; data_mean for mean subtraction; data_scale for scaling; data_mean_and_scale for both mean subtraction and scaling. Must be consistent with the order of input_name. Multiple values can be set as described for param_value. See the "Conversion Internal Process" section for impact. | Values: data_mean_and_scale, data_mean, data_scale, no_preprocess | Required |

mean_value | Purpose: The image mean value for the preprocessing method. Description: Required if norm_type includes data_mean_and_scale or data_mean. Two configuration options: a single value for all channels or channel-specific values (separated by spaces). Channel count should match norm_type nodes. Set to 'None' for nodes without mean processing. Multiple values can be set as described for param_value. | N/A | Optional |

scale_value | Purpose: The scale coefficient for the preprocessing method. Description: Required if norm_type includes data_mean_and_scale or data_scale. Similar to mean_value, two configurations are allowed: a single value for all channels or channel-specific values (separated by spaces). Channel count should match norm_type nodes. Set to 'None' for nodes without scale processing. Multiple values can be set as described for param_value. | N/A | Optional |

-

Calibration Parameter Group

| Parameter Name | Description | Value Range | Optional/Required |

|---|---|---|---|

cal_data_dir | Specifies the directory containing calibration samples for model calibration. Description: The data in this directory should adhere to the input configuration requirements. Please refer to the section on Preparing Calibration Data for more details. When configuring multiple input nodes, the order of the specified nodes must strictly match that in input_name. Multiple value configurations can be done as described earlier for param_value. For calibration_type of load, skip, this parameter is not needed. Note: To facilitate your use, if no cal_data_type configuration is found, we will infer the data type based on the file extension. If the file extension ends with _f32, it will be considered float32; otherwise, uint8. However, we strongly recommend constraining the data type using the cal_data_type parameter. | N/A | Optional |

cal_data_type | Specifies the binary file data storage type for calibration data. Description: The data storage type used by the model during calibration. If not specified, the tool will determine the type based on the file name suffix. | float32, uint8 | Optional |

preprocess_on | Enables automatic preprocessing of image calibration samples. Description: This option is only applicable to models with 4D image inputs. Do not enable this for non-4D models. When enabled, the tool reads jpg/bmp/png files in the cal_data_dir and resizes them to the required dimensions for input nodes. It is recommended to keep this parameter disabled to ensure calibration accuracy. Refer to the Preparing Calibration Data section for more information on its impact. | True, False | Optional |

calibration_type | Calibration algorithm type to use. Description: Both kl and max are public calibration quantization algorithms, whose basic principles can be found in online resources. When using load, the QAT model must be exported using a plugin. mix is an integrated search strategy that automatically determines sensitive quantization nodes and selects the best method at the node granularity, ultimately constructing a calibration combination that leverages the advantages of multiple methods. default is an automated search strategy that attempts to find a relatively better combination of calibration parameters from a series. We suggest starting with default. If the final accuracy does not meet expectations, refer to the Precision Tuning section for suggested parameter adjustments. If you just want to verify the model performance without accuracy requirements, try the skip mode, which uses random numbers for calibration and does not require calibration data, suitable for initial model structure validation. Note: Using the skip mode results in models calibrated with random numbers, which are not suitable for accuracy validation. | default, mix, kl, max, load, skip | Required |

max_percentile | Parameter for the max calibration method, used to adjust the cutoff point for max calibration.Description: Only valid when calibration_type is set to max. Common options include: 0.99999/0.99995/0.99990/0.99950/0.99900. Start with calibration_type set to default, and adjust this parameter if the final accuracy is unsatisfactory, as advised in the Precision Tuning section. | 0.0 - 1.0 | Optional |

per_channel | Controls whether to calibrate each channel individually within a featuremap. Description: Effective when calibration_type is not set to default. Start with default and adjust this parameter if necessary, as suggested in the Precision Tuning section. | True, False | Optional |

run_on_cpu | Forces operators to run on CPU. Description: Although CPU performance is inferior to BPU, it provides float precision calculations. Specify this parameter if you're certain that some operators need to run on CPU. Set values to specific node names in your model, following the same configuration method as described earlier for param_value. Note: In RDK Ultra, this parameter functionality has been merged into the node_info parameter and is planned to be deprecated in future versions. It continues to be available in RDK X3. | N/A | Optional |

run_on_bpu | Forces an operator to run on BPU. Description: To maintain the accuracy of the quantized model, occasionally, the conversion tool may place some operators that can run on BPU on CPU. If you have higher performance requirements and are willing to accept slightly more quantization loss, you can explicitly specify that an operator runs on BPU. Set values to specific node names in your model, following the same configuration method as described earlier for param_value. Note: In RDK Ultra, this parameter functionality has been merged into the node_info parameter and is planned to be deprecated in future versions. It continues to be available in RDK X3. | N/A | Optional |

optimization | Sets the model output format to int8 or int16. Description: If set to set_model_output_int8, the model will output in low-precision int8 format; if set to set_model_output_int16, the model will output in low-precision int16 format. Note: RDK X3 only supports set_model_output_int8, while RDK Ultra supports both set_model_output_int8 and set_model_output_int16. | set_model_output_int8, set_model_output_int16 | Optional |

-

Compiler Parameters

{#compiler_parameters}

| Parameter Name | Description | Value Range | Optional/Required |

|---|---|---|---|

compile_mode | Purpose: Select the compilation strategy. Description: Choose between latency for inference time optimization or bandwidth for DDR access bandwidth optimization. For models without significant bandwidth exceedance, use the latency strategy is recommended. | Value Range: latency, bandwidth.Default: latency. | Required |

debug | Purpose: Enable debug information in the compilation process. Description: Enabling this parameter saves performance analysis results in the model, allowing you to view layer-wise BPU operator performance (including compute, compute time, and data movement time) in the generated static performance assessment files. It is recommended to keep it disabled by default. | Value Range: True, False.Default: False. | Optional |

core_num | Purpose: Number of cores for model execution. Description: D-Robotics Platform supports using multiple AI accelerator cores simultaneously for inference tasks. Multiple cores are beneficial for larger input sizes, with double-core speed typically around 1.5 times that of single-core. If your model has large inputs and追求极致速度, set core_num=2. Note: This option is not supported for RDK Ultra, please do not configure. | Value Range: 1, 2.Default: 1. | Optional |

optimize_level | Purpose: Model compilation optimization level. Description: The optimization levels range from O0 (no optimization, fastest compile) to O3 (higher optimization, slower compile). Normal performance models should use O3 for optimal performance. Lower levels can be used for faster development or debugging processes. | Value Range: O0, O1, O2, O3.Default: None. | Required |

input_source | Purpose: Set the source of input data for the on-board bin model. Description: This parameter is for engineering environment compatibility. Configure after model validation. Options include ddr (memory), pyramid, and resizer. Note: If set to resizer, the model's h*w should be less than 18432. In an engineering environment, adapting pyramid and resizer sources requires specific configuration, e.g., if the model input name is data and the source is memory (ddr), set as {`"data": "ddr"`}. | Value Range: ddr, pyramid, resizerDefault: None (auto-selected based on input_type_rt). | Optional |

max_time_per_fc | Purpose: Maximum continuous execution time per function call (in us). Description: In the compiled data instruction model, each inference on BPU is represented by one or more function calls (BPU execution granularity). A value of 0 means no limit. This parameter limits the max execution time per function call, allowing the model to be interrupted if necessary. See the section on Model Priority Control for details. - This parameter is for implementing model preemption; ignore if not needed. - Model preemption is only supported on development boards, not PC simulators. | Value Range: 0 or 1000-4294967295.Default: 0. | Optional |

jobs | Purpose: Number of processes for compiling the bin model. Description: Sets the number of processes during bin model compilation, potentially improving compile speed. | Value Range: Up to the maximum number of supported cores on the machine. Default: None. | Optional |

-

Custom Operator Parameter Group

| Parameter Name | Description of Configuration | Range of Values | Optional/Mandatory |

|---|---|---|---|

custom_op_method | Purpose: Select strategy for custom operator. Description: Currently, only the 'register' strategy is supported. | Range: register.Default: None. | Optional |

op_register_files | Purpose: Names of Python files implementing the custom operator(s). Description: Multiple files can be separated by ;. | Range: None. Default: None. | Optional |

custom_op_dir | Purpose: Path to the directory containing the Python files for the custom operator(s). Description: Please use relative path when setting the path. | Range: None. Default: None. | Optional |

RDK Ultra int16 Configuration Instructions {#int16_config}

In the process of model conversion, most operators in the model will be quantized to int8 for computation. By configuring the "node_info" parameter, you can specify in detail that the input/output data type of a specific op is int16 for computation (the specific supported operator range can be referred to the RDK Ultra operator support list in the "Supported Operator List" chapter). The basic principle is as follows:

After you configure the input/output data type of a certain op as int16, the model conversion will automatically update and check the int16 configuration of the op's input/output context. For example, when configuring the input/output data type of op_1 as int16, it actually implicitly specifies that the previous/next op of op_1 needs to support int16 computation. For unsupported scenarios, the model conversion tool will print a log to indicate that the int16 configuration combination is temporarily unsupported and will fallback to int8 computation.

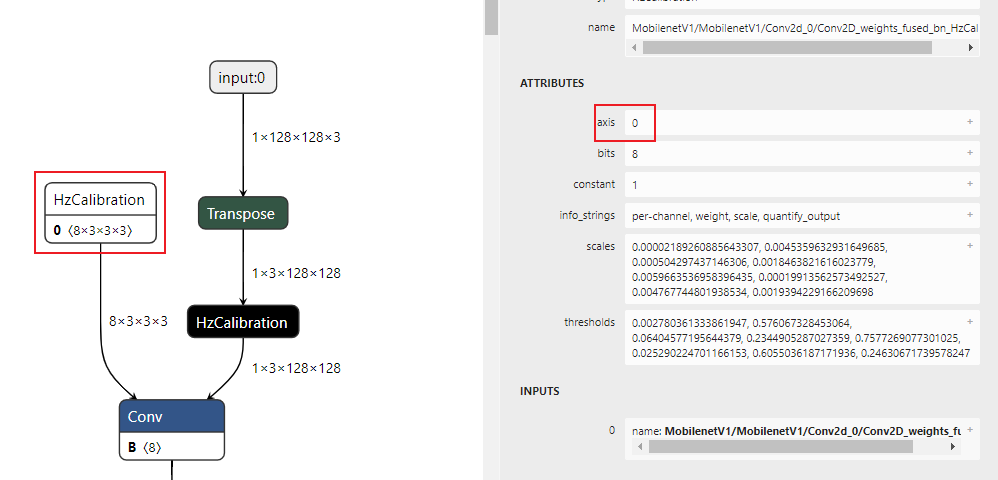

Pre-processing HzPreprocess Operator Instructions {#pre_process}

The pre-processing HzPreprocess operator is a pre-processing operator node inserted after the model input node during the model conversion process of D-Robotics Model Conversion Tool. It is used to normalize the input data of the model. This section mainly introduces the parameters "norm_type", "mean_value", "scale_value", and the explanation of the HzPreprocess operator node generated by the model pre-processing.

norm_type Parameter Explanation

-

Parameter Function: This parameter is used to add the input data pre-processing method to the model.

-

Parameter Value Range and Explanation:

- "no_preprocess" indicates no data pre-processing is added.

- "data_mean" indicates subtraction of mean value pre-processing.is provided.

- "data_scale" indicates multiplication by scale factor pre-processing.

- "data_mean_and_scale" indicates subtraction of mean value followed by multiplication by scale factor pre-processing.

When there are multiple input nodes, the order of the set nodes must strictly match the order in "input_name".

mean_value Parameter Explanation

-

Parameter Function: This parameter represents the mean value subtracted from the image for the specified pre-processing method.

-

Usage: This parameter needs to be configured when "norm_type" is set to "data_mean_and_scale" or "data_mean".

-

Parameter Explanation:

- When there is only one input node, only one value needs to be configured, indicating that all channels will subtract this mean value.

- When there are multiple nodes, provide values that match the number of channels (these values are separated by spaces), indicating that each channel will subtract a different mean value.

- The number of configured input nodes must match the number of nodes configured in "norm_type".

- If there is a node that does not require mean processing, configure it as "None".

scale_value Parameter Explanation

-

Parameter Function: This parameter represents the scale factor for the specified pre-processing method.

-

Usage: This parameter needs to be configured when "norm_type" is set to "data_mean_and_scale" or "data_scale".- Parameter description:

- When there is only one input node, only one value needs to be configured, which represents the scaling factor for all channels.

- When there are multiple nodes, provide the same number of values as the number of channels (these values are separated by spaces), which represents different scaling factors for each channel.

- The number of configured input nodes must be consistent with the number of nodes configured for

norm_type. - If there is a node that does not require

scaleprocessing, configure it as'None'.

Formula and example explanation

- Formula for data normalization during model training

The mean and scale parameters in the YAML file need to be calculated based on the mean and std during training.

The calculation formula for data normalization in the preprocessing node (i.e. in the HzPreprocess node) is norm_data = (data - mean) * scale.

Taking yolov3 as an example, the preprocessing code during training is as follows:

def base_transform(image, size, mean, std):

x = cv2.resize(image, (size, size).astype(np.float32))

x /= 255

x -= mean

x /= std

return x

class BaseTransform:

def __init__(self, size, mean=(0.406, 0.456, 0.485), std=(0.225, 0.224, 0.229)):

self.size = size

self.mean = np.array(mean, dtype=np.float32)

self.std = np.array(std, dtype=np.float32)

The formula becomes: norm_data = (\fracdata{`255`}` - mean) * \frac`{`1`}std``,

Rewritten as the calculation method in the HzPreprocess node: norm_data = (\fracdata{`255`}` - mean) * \frac`{`1`}std = (data - 255mean) * \frac1{`255std`},

Therefore: mean_yaml = 255 mean, scale_yaml = \frac1{`255std`}.

- Formula during model inference

By configuring the parameters in the YAML configuration file, whether to add the HzPreprocess node is determined. When configuring mean/scale, when performing model conversion, a HzPreprocess node will be added to the input, which can be understood as performing a convolution operation on the input data.

The calculation formula in the HzPreprocess node is: ((input (range [-128,127]) + 128) - mean) * scale, where weight = scale, bias = (128 - mean) * scale.

- After adding mean/scale in the YAML, there is no need to include MeanTransformer and ScaleTransformer in the preprocessing.

- Adding mean/scale in the YAML will place the parameters within the HzPreprocess node, which is a BPU (Base Processing Unit) node.

Conversion Internal Process Interpretation

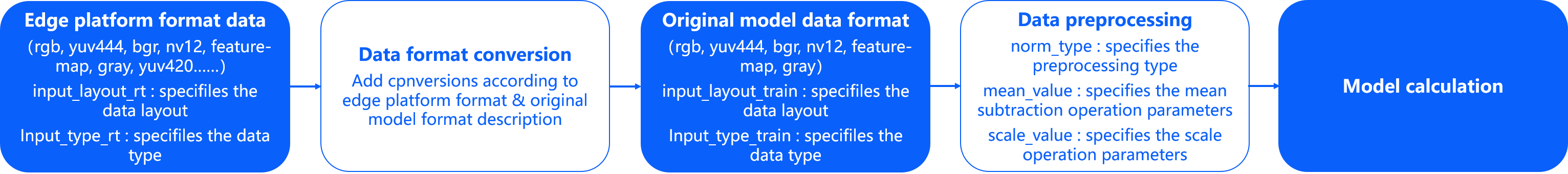

During the model conversion stage, the floating-point model is transformed into the D-Robotics mixed heterogeneous model. In order to efficiently run this heterogeneous model on embedded devices, the model conversion focuses on solving two key issues: input data processing and model optimization compilation. This section will discuss these two key issues in detail.

Input Data Processing: The D-Robotics X3 processor provides hardware-level support for certain types of model input pathways. For example, in the case of the video pathway, the video processing subsystem provides functions such as image cropping, scaling, and other image quality optimization for image acquisition. The output of these subsystems is in the YUV420 NV12 format, while the algorithm models are typically trained on more common image formats such as BGR/RGB.

D-Robotics provides the following solutions for this situation:

-

Each converted model provides two types of descriptions: one for describing the input data of the original floating-point model (

input_type_trainandinput_layout_train), and the other for describing the input data of the processor we need to interface with (input_type_rtandinput_layout_rt). -

Mean/scale operations on image data are also common, but these operations are not suitable for the data formats supported by processors such as YUV420 NV12. Therefore, we have embedded these common image preprocessing operations into the model.

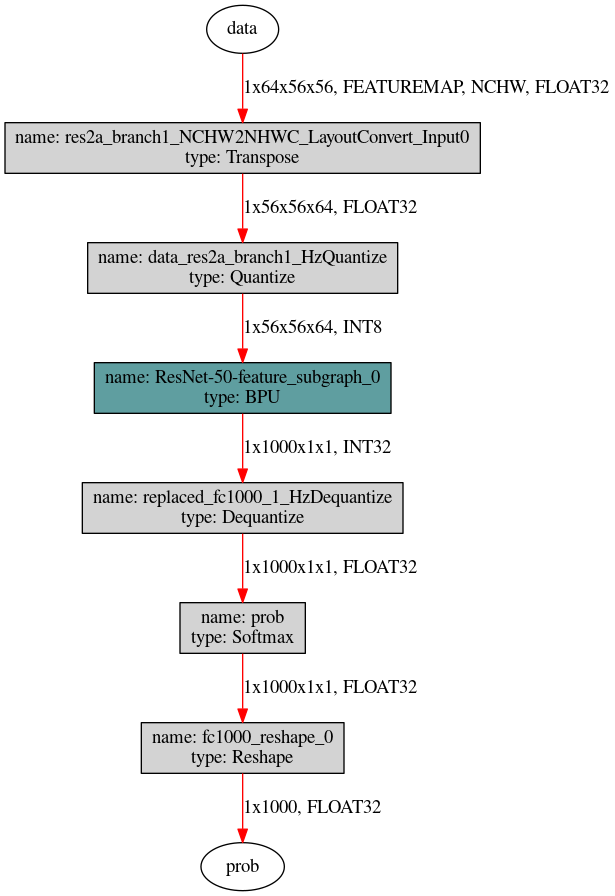

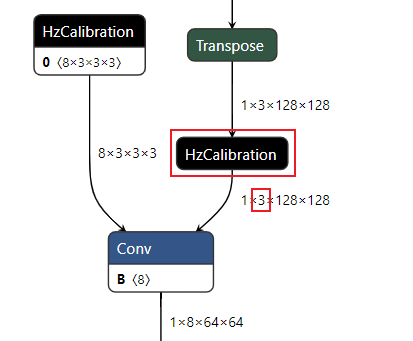

After processing through the above two methods, the input part of the heterogeneous model ***.bin generated during the model conversion stage will look like the following:

The data layouts shown in the above figure include NCHW and NHWC, where N represents the number, C represents the channel, H represents the height, and W represents the width. The two different layouts reflect different memory access characteristics. NHWC is more commonly used in TensorFlow models, while NCHW is used in Caffe. The D-Robotics processor does not restrict the use of data layouts, but there are two requirements: first, input_layout_train must be consistent with the data layout of the original model; second, prepare the data with the data layout consistent with input_layout_rt on the processor, as the correct data layout is the basis for successful data parsing.

The model conversion tool will automatically add data conversion nodes based on the data formats specified by input_type_rt and input_type_train. According to D-Robotics's actual usage experience, not all possible type combinations are needed. To prevent misuse, we only provide a few fixed type combinations, as shown in the table below:

input_type_train \ input_type_rt | nv12 | yuv444 | rgb | bgr | gray | featuremap |

|---|---|---|---|---|---|---|

| yuv444 | Y | Y | N | N | N | N |

| rgb | Y | Y | Y | Y | N | N |

| bgr | Y | Y | Y | Y | N | N |

| gray | N | N | N | N | Y | N |

| featuremap | N | N | N | N | N | Y |

The first row in the table represents the types supported by input_type_rt, and the first column represents the types supported by input_type_train. The Y/N indicates whether the conversion from input_type_rt to input_type_train is supported. In the final produced bin model after model conversion, the conversion from input_type_rt to input_type_train is an internal process, so you only need to pay attention to the data format of input_type_rt. Understanding the requirements of each input_type_rt is important for preparing inference data for embedded applications. The following are explanations of each format of input_type_rt:

-

RGB, BGR, and gray are common image formats, and each value is represented by UINT8.

-

YUV444 is a common image format, and each value is represented by UINT8.

-

NV12 is a common YUV420 image format, and each value is represented by UINT8.

-

A special case of nv12 is when "input_space_and_range" is set to "bt601_video" (refer to the previous description of the "input_space_and_range" parameter). In contrast to the regular nv12 case, the value range in this case changes from [0,255] to [16,235], and each value is still represented by UINT8.

-

The data format type for the input feature map of the model only requires the data to be four-dimensional, and each value is represented by float32. For example, models processing radar and audio often use this format.

The calibration data only needs to be processed until input_type_train, and be careful not to perform duplicate normalization operations.

The "input_type_rt" and "input_type_train" are fixed in the algorithm toolchain's processing flow. If you are certain that no conversion is needed, you can set both "input_type" configurations to be the same. This way, "input_type" will be treated as a pass-through without affecting the actual execution performance of the model.

Similarly, the data preprocessing is also fixed in the flow. If you don't need any preprocessing, you can disable this feature by configuring norm_type, without affecting the actual execution performance of the model.

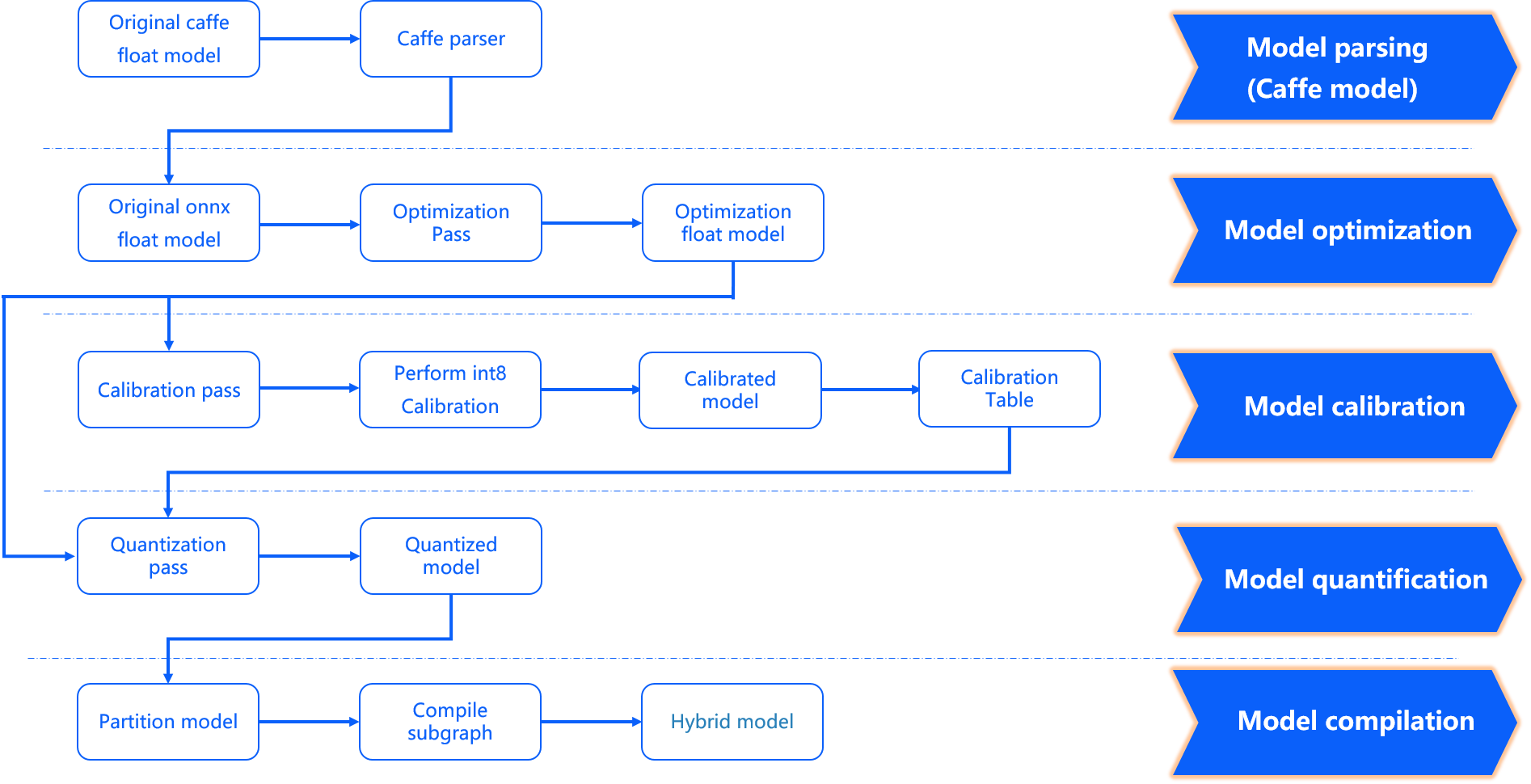

The model optimization compilation completes several important stages, including model parsing, model optimization, model calibration and quantization, and model compilation. The internal workflow is shown in the following diagram:

- "input_type_rt*" represents the intermediate format of input_type_rt.

- The X3 processor architecture only supports inference with "NHWC" data. Please use the visualization tool Netron to view the data layout of the input nodes in the "quantized_model.onnx" and decide whether to add "layout conversion" in the preprocessing.

In the model parsing stage, for a Caffe floating-point model, it will be transformed into an ONNX floating-point model. The original floating-point model will determine whether to include a data preprocessing node based on the configuration parameters in the transformation configuration YAML file. This stage produces an "original_float_model.onnx". This ONNX model still has a calculation precision of float32, but it includes a data preprocessing node in the input part.

Ideally, this preprocessing node should complete the complete conversion from "input_type_rt" to "input_type_train". In reality, the entire type conversion process will be completed in collaboration with the D-Robotics processor hardware. The ONNX model does not include the hardware conversion. Therefore, the real input type of ONNX will use an intermediate type, which is the result type of the hardware processing of "input_type_rt". The data layout (NCHW/NHWC) will remain consistent with the input layout of the original floating-point model. Each "input_type_rt" has a specific corresponding intermediate type, as shown in the following table:

| nv12 | yuv444 | rgb | bgr | gray | featuremap |

|---|---|---|---|---|---|

| yuv444_128 | yuv444_128 | RGB_128 | BGR_128 | GRAY_128 | featuremap |

The bold part in the table is the data type specified by "input_type_rt", and the second row represents the specific intermediate type corresponding to a specific "input_type_rt". This intermediate type is the input type of the "original_float_model.onnx". Each type is explained as follows:

- yuv444_128 represents yuv444 data subtracting 128, and each value is represented by int8.

- RGB_128 represents RGB data subtracting 128, and each value is represented by int8.

- BGR_128 represents BGR data subtracting 128, and each value is represented by int8.

- GRAY_128 represents gray data subtracting 128, and each value is represented by int8.- featuremap is a four-dimensional tensor data, and each value is represented as float32.

Model Optimization Phase implements some optimization strategies for the model that are suitable for the D-Robotics platform, such as BN fusion into Conv. The output of this phase is an optimized_float_model.onnx file. The computational precision of this ONNX model is still float32, and the optimization will not affect the computational results of the model. The requirements for the input data of the model are still consistent with the original_float_model mentioned earlier.

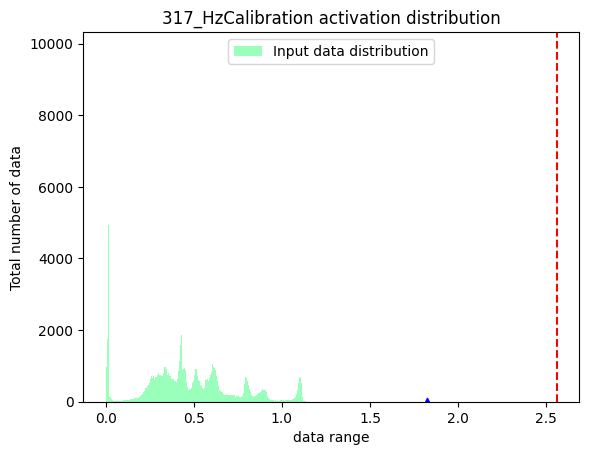

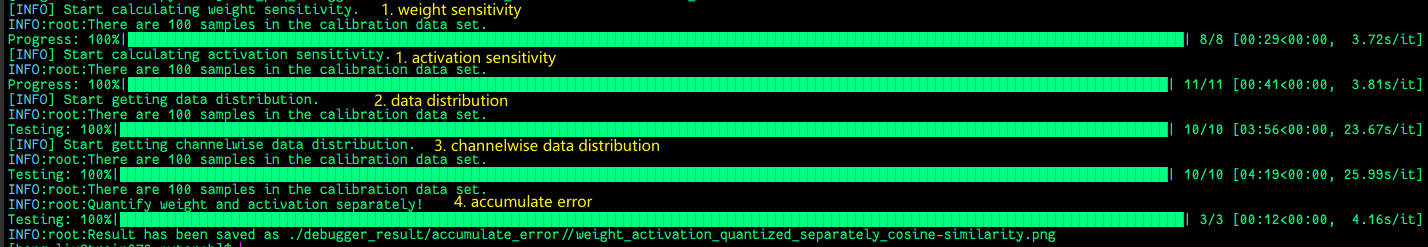

Model Calibration Phase uses the calibration data provided by you to calculate the necessary quantization threshold parameters. These parameters will be directly input into the quantization phase without generating new model states.

Model Quantization Phase uses the parameters obtained from calibration to complete model quantization. The output of this phase is a quantized_model.onnx file. The computational precision of this model is int8, and using this model can evaluate the accuracy loss caused by quantization. The basic data format and layout of the input for this model remain the same as the original_float_model, but the layout and numerical representation have changed. The overall changes compared to the original_float_model input are described as follows:

- The data layout of "RDK X3" is NHWC.

- When the value of "input_type_rt" is non-"featuremap", the data type of the input is INT8. Conversely, when the value of "input_type_rt" is "featuremap", the data type of the input is float32.

The relationship between the data layout is as follows:

- Original model input layout: NCHW.

- input_layout_train: NCHW.

- origin.onnx input layout: NCHW.

- calibrated_model.onnx input layout: NCHW.

- quanti.onnx input layout: NHWC.

That is, the input layout of input_layout_train, origin.onnx, and calibrated_model.onnx is consistent with the original model input layout.

Please note that if input_type_rt is nv12, the input layout of quanti.onnx is NHWC.

Model Compilation Phase uses the D-Robotics model compiler to convert the quantized model into the computation instructions and data supported by the D-Robotics platform. The output of this phase is a *.bin model, which is the model that can be run on the D-Robotics embedded platform, and it is the final output of the model conversion.

Interpretation of Conversion Results

This section will introduce the interpretation of the successful conversion status and the analysis methods for unsuccessful conversions. To confirm the successful model conversion, you need to confirm from three aspects: the "makertbin" status information, similarity information, and the "working_dir" output. Regarding the "makertbin" status information, the console will output a clear prompt message at the end of the information when the conversion is successful, as follows:

2021-04-21 11:13:08,337 INFO Convert to runtime bin file successfully!

2021-04-21 11:13:08,337 INFO End Model Convert

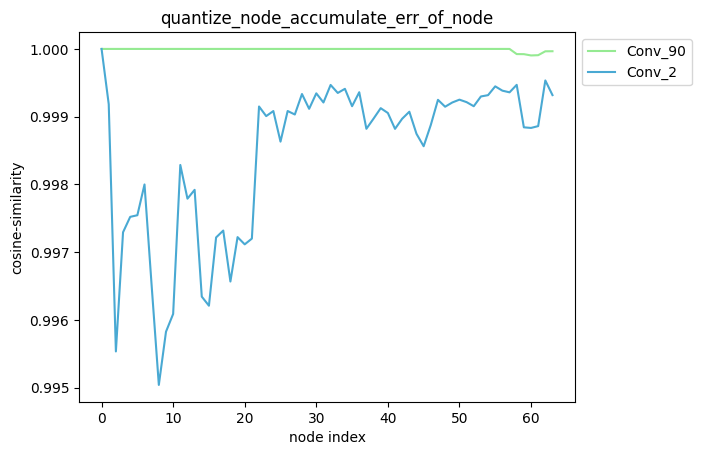

The similarity information also exists in the console output of "makertbin". Before the "makertbin" status information, its content is in the following format:

======================================================================

Node ON Subgraph Type Cosine Similarity Threshold

``````bash

…… …… …… …… 0.999936 127.000000

…… …… …… …… 0.999868 2.557209

…… …… …… …… 0.999268 2.133924

…… …… …… …… 0.996023 3.251645

…… …… …… …… 0.996656 4.495638

The output content listed above, Nodes, ON, Subgraph and Type are consistent with the interpretation of the hb_mapper checker tool, please refer to the previous section Check Results;

Threshold is the calibration threshold for each layer, which is used to provide feedback to D-Robotics technical support in abnormal conditions, and does not need to be paid attention to under normal conditions;

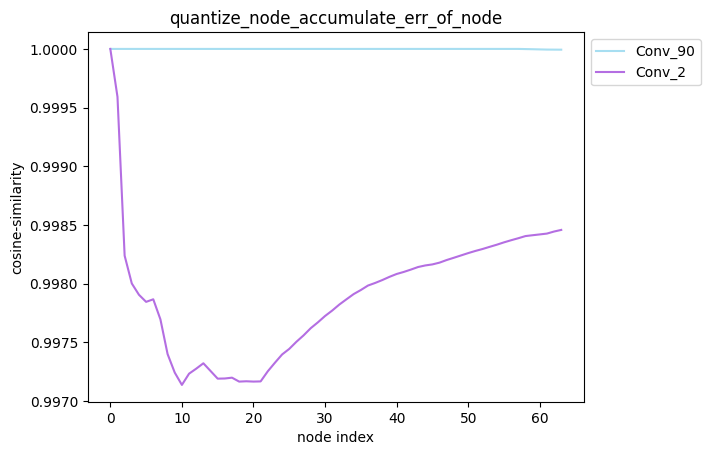

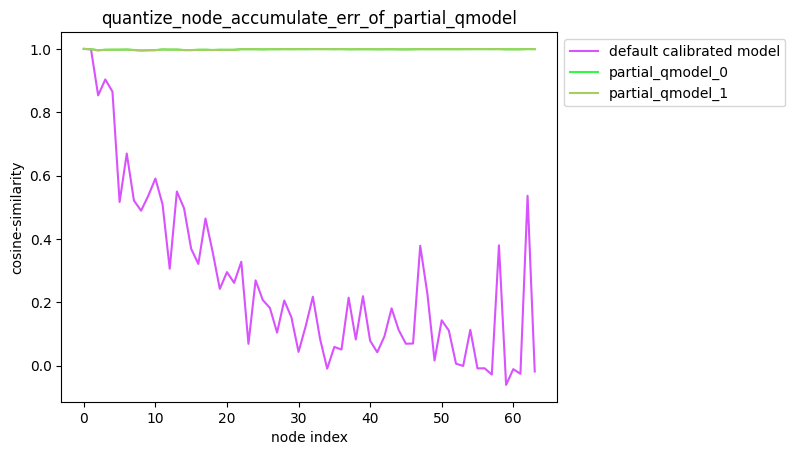

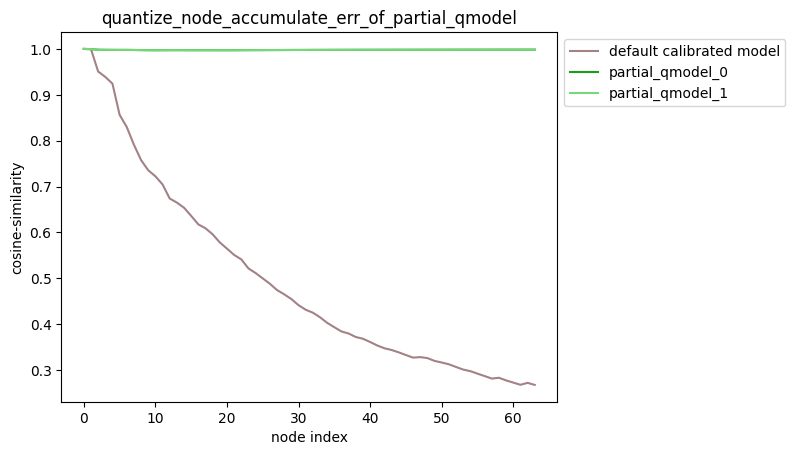

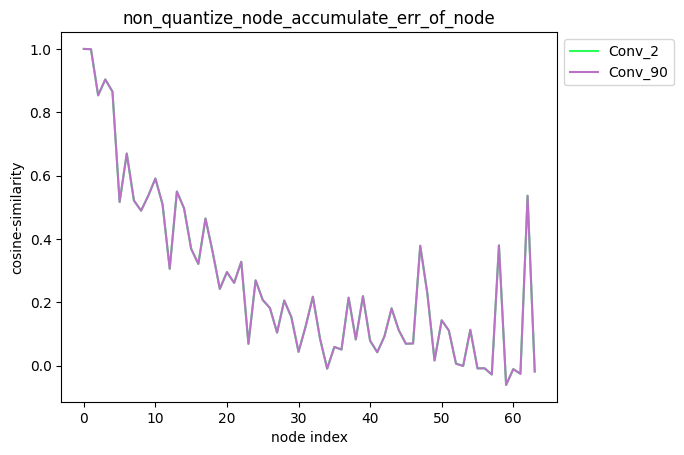

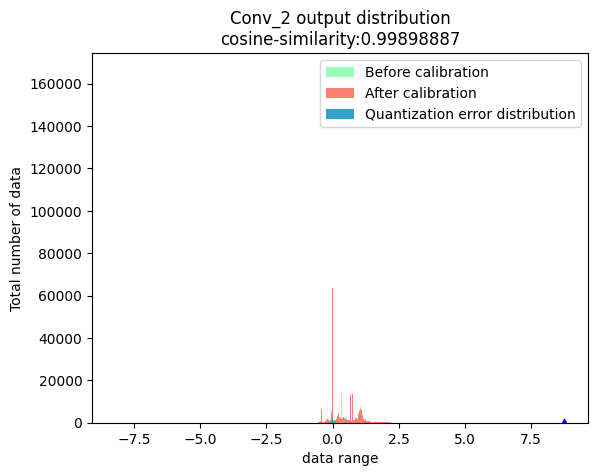

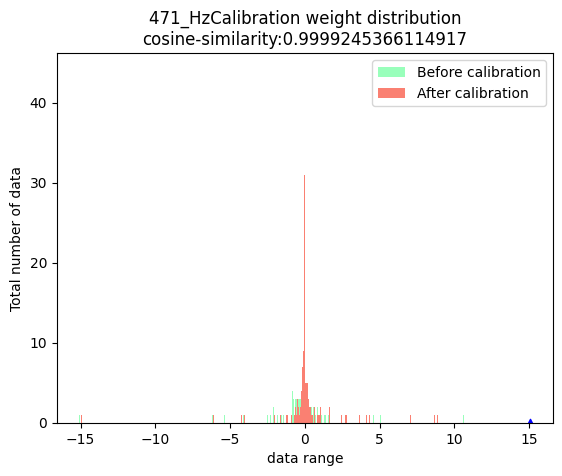

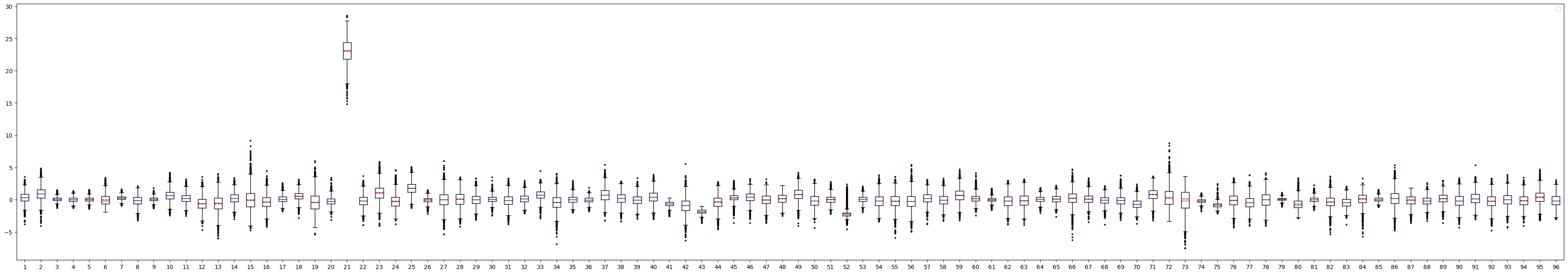

The column of Cosine Similarity reflects the cosine similarity of the output results of the corresponding operator in the Node column between the original floating-point model and the quantization model.

In general, the cosine similarity of output nodes in the model >= 0.99 can be considered to be normal, and if the similarity of output nodes is lower than 0.8, there is obvious loss of accuracy. Of course, Cosine Similarity only provides a reference for the stability of quantized data, and there is no obvious direct relationship with the impact on model accuracy. To obtain accurate accuracy information, you need to read the section Model Accuracy Analysis and Tuning.

The conversion output is stored in the path specified by the conversion configuration parameter working_dir. After the model conversion is successfully completed, you can obtain the following files in this directory (the *** part is specified by the conversion configuration parameter output_model_file_prefix):

- ***_original_float_model.onnx

- ***_optimized_float_model.onnx

- ***_calibrated_model.onnx

- ***_quantized_model.onnx

- ***.bin

The usage of each output is explained in the section Conversion Output Interpretation.

Before running on the board, we recommend that you complete the model performance evaluation and tuning process described in Performance Evaluation of the Model to avoid extending the model conversion issues to the subsequent embedded end.

If any of the three aspects of successful model conversion mentioned above are missing, it indicates that there is an error in the model conversion. In general, the makertbin tool will output error information to the console when an error occurs. For example, when converting a Caffe model without configuring the prototxt and caffe_model parameters in the YAML file, the model conversion tool gives the following prompt:

2021-04-21 14:45:34,085 ERROR Key 'model_parameters' error:

Missing keys: 'caffe_model', 'prototxt'

2021-04-21 14:45:34,085 ERROR yaml file parse failed. Please double check your input

2021-04-21 14:45:34,085 ERROR exception in command: makertbin

If the log information output to the console cannot help you find the problem, please refer to the section Model Quantization Errors and Solutions for troubleshooting. If the above steps still cannot solve the problem, please contact the D-Robotics technical support team or submit your issue in the official D-Robotics developer community, and we will provide support within 24 hours.

Conversion Output Interpretation{#conversion_output}

As mentioned earlier, the successful conversion of the model produces four parts, each of which will be introduced in this section:

- ***_original_float_model.onnx

- ***_optimized_float_model.onnx

- ***_calibrated_model.onnx

- ***_quantized_model.onnx

- ***.bin

The process of generating ***_original_float_model.onnx can refer to the explanation in Conversion Interpretation. The computation accuracy of this model is exactly the same as the original float model used in the conversion input. One important change is the addition of some data preprocessing computations to adapt to the D-Robotics platform (an additional preprocessing operator node called "HzPreprocess" has been added, which can be viewed using the netron tool to open the onnx model. For details about this operator, please see Preprocessing Parameters of Operator HzPreprocess). In general, you do not need to use this model. However, if you encounter abnormal results in the conversion process and the troubleshooting method mentioned earlier does not solve your problem, please provide this model to D-Robotics's technical support team, or submit your questions in the D-Robotics Official Technical Community. This will help you quickly resolve your issue.

The process of generating ***_calibrated_model.onnx can refer to the explanation in Conversion Interpretation. This model is produced by the model conversion toolchain, which optimizes the float model's structure and obtains the quantization parameters for each node by calculating with calibration data, which are saved in the calibration node as intermediate products.

The process of generating ***_optimized_float_model.onnx can refer to the explanation in Conversion Interpretation. This model undergoes some operator-level optimization operations, such as operator fusion. By comparing it with the original_float model visually, you can clearly see some changes at the operator structure level, but these do not affect the model's computation accuracy. In general, you do not need to use this model. However, if you encounter abnormal results in the conversion process and the troubleshooting method mentioned earlier does not solve your problem, please provide this model to D-Robotics's technical support team, or submit your questions in the D-Robotics Official Technical Community. This will help you quickly resolve your issue.

The process of generating ***_quantized_model.onnx can refer to the explanation in Conversion Interpretation. This model has completed the calibration and quantization process. To evaluate the accuracy loss of the quantized model, you can read the content on model accuracy analysis and optimization in the following sections. This model is necessary for accuracy verification. For specific usage, please refer to the introduction in Model Accuracy Analysis and Optimization.

***.bin is the model that can be loaded and run on the D-Robotics processor. With the content introduced in the "Runtime Application Development Guide" section on on-board operation, you can quickly deploy and run the model on the D-Robotics processor. However, to ensure that the model's performance and accuracy meet your expectations, we recommend completing the performance and accuracy analysis process introduced in Model Conversion and Model Accuracy Analysis and Optimization before entering the application development and deployment stages.

In general, the model that can be run on the D-Robotics processor can be obtained after the model conversion stage. However, to ensure that the performance and accuracy of the model meet the application requirements, D-Robotics recommends completing the performance evaluation and accuracy evaluation steps after each conversion.

The model conversion process generates the onnx model, which is an intermediate product for users to verify the model's accuracy. Therefore, it does not guarantee its compatibility between versions. If you use the evaluation script in the example to evaluate the onnx model on a single image or on a test set, please use the onnx model generated by the current version of the tool for operation.

Model Performance Analysis{#performance_evaluation}

This section introduces how to use the tools provided by D-Robotics to evaluate the model's performance. By using these tools, you can obtain performance results that are consistent with actual on-board execution. If you find that the evaluation results do not meet your expectations, it is recommended that you try to solve the performance issues based on the optimization suggestions provided by D-Robotics, rather than extending the model's performance issues to the application development stage.

Performance Evaluation on Development Machine{#hb_perf}

Use the "hb_perf" tool to evaluate the model's performance. The usage is as follows:

hb_perf ***.bin

If the analysis is performed on the "pack" model, it is necessary to add the "-p" parameter, and the command becomes "hb_perf -p ***.bin".

For information about the "pack" model, please refer to the introduction in the other model tools (optional) section.

:::The ***.bin in the command is the quantized model generated in the model conversion step. After the command execution is completed, a hb_perf_result folder will be generated in the current directory, which contains the specific model analysis results.

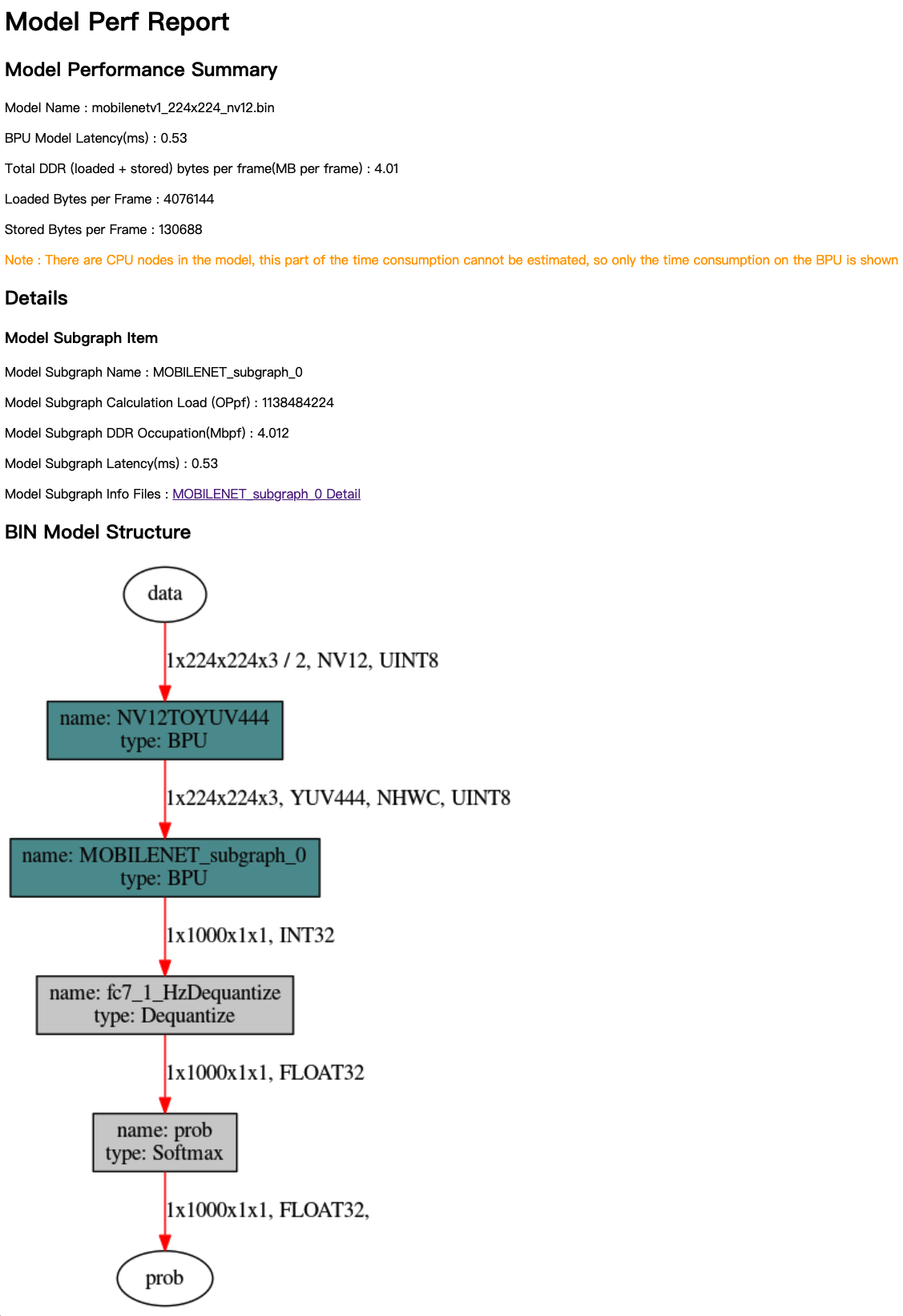

Here is an example of the evaluation results for the MobileNetv1 model:

hb_perf_result/

└── mobilenetv1_224x224_nv12

├── MOBILENET_subgraph_0.html

├── MOBILENET_subgraph_0.json

├── mobilenetv1_224x224_nv12

├── mobilenetv1_224x224_nv12.html

├── mobilenetv1_224x224_nv12.png

└── temp.hbm

Open the mobilenetv1_224x224_nv12.html main page in a browser. Its content is as shown in the following figure:

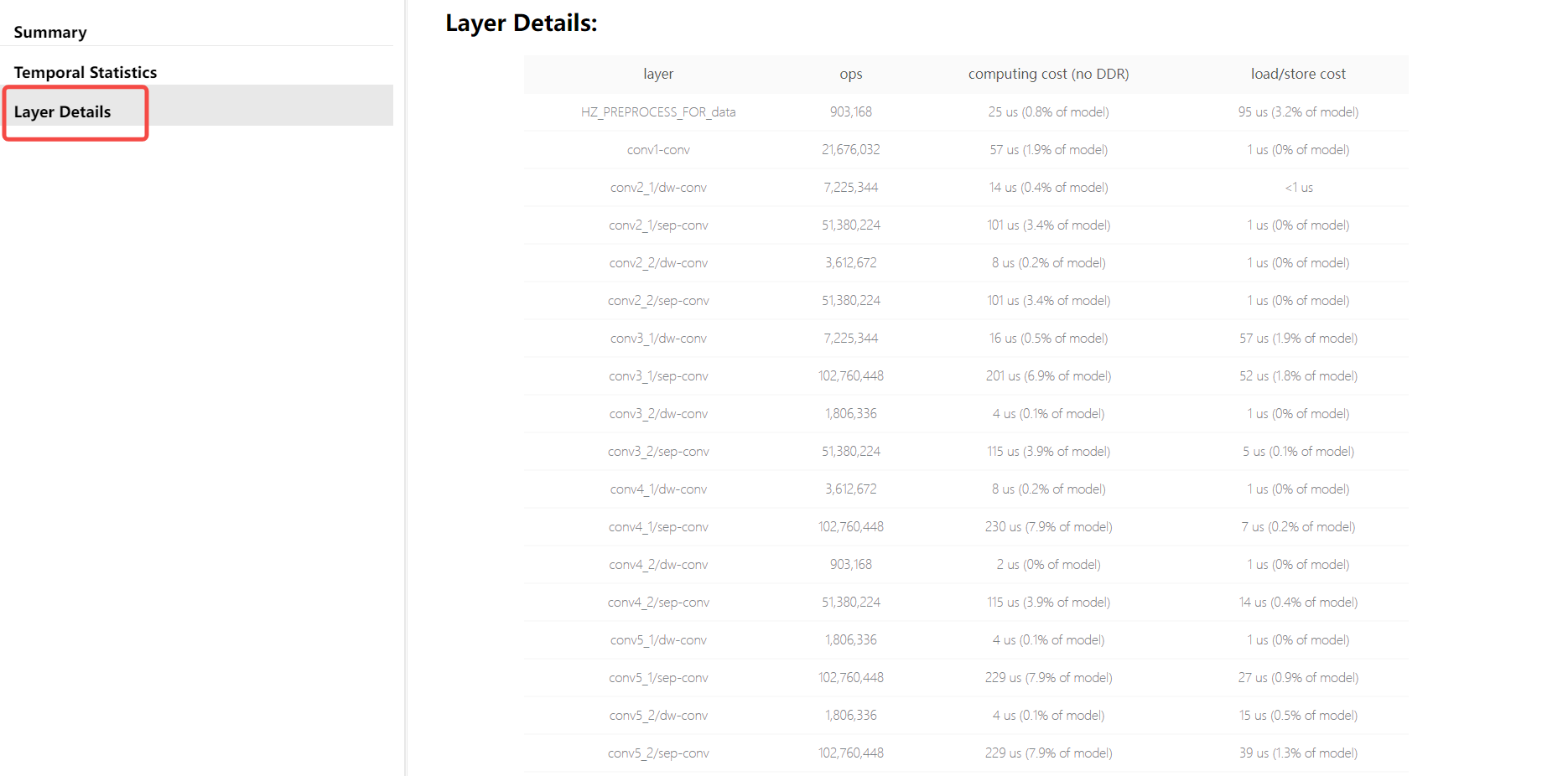

The analysis results mainly consist of three parts: Model Performance Summary, Details, and BIN Model Structure. Model Performance Summary provides an overall performance evaluation of the bin model, with the following metrics:

- Model Name - The name of the model.

- Model Latency (ms) - The overall time taken for calculating one frame of the model (in milliseconds).

- Total DDR (loaded+stored) bytes per frame (MB per frame) - The total amount of DDR used for loading and storing data in the BPU section of the model (in MB per frame).